Almost all Linux distributions ship with Linux bridge support. In this lab I will demonstrate how to set up a Linux bridge with VMs attached to it acting as hosts. I will also show how to add basic firewall rules to the bridge.

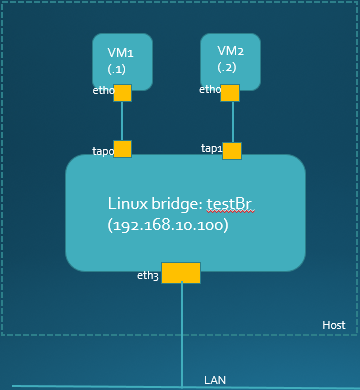

Topology:

My test bridge is mapped to one physical interface and two virtual interfaces. The VMs are connected to the virtual interfaces on the bridge. I am using Linux Mint on the VMs. Note that mapping to a physical interface is optional; it is required only if you need external connectivity for your VMs.

Pre-condition:

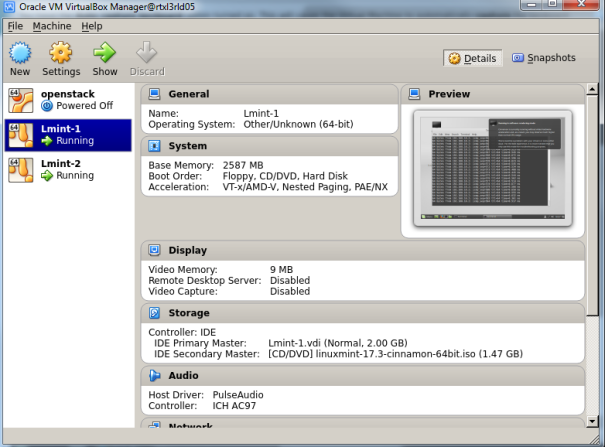

- Linux bridge package (so that you can run the brctl command), VirtualBox and your favorite VM image. I like Linux Mint because it is lightweight and boots fast

- sudo apt-get install uml-utilities bridge-utils (uml-utilities provides tunctl, bridge-utils provides brctl)
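Before starting, it can help to confirm that the tools used in this lab are actually on your PATH. The snippet below is a minimal sketch; `missing_tools` is a hypothetical helper name, not part of any package. Note that ebtables is used later in the lab and is not covered by the apt-get line above, so on your system it may need to be installed separately.

```shell
#!/usr/bin/env bash
# Print the name of every tool given as an argument that is NOT found on PATH.
# missing_tools is a hypothetical helper written for this lab.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Tools used in this lab: brctl (bridge-utils), tunctl (uml-utilities), ebtables
missing=$(missing_tools brctl tunctl ebtables)
if [ -n "$missing" ]; then
    echo "Missing tools:" $missing
else
    echo "All tools found"
fi
```

On some distributions brctl is installed under /sbin or /usr/sbin, so it may be reported as missing for a regular user even though `sudo brctl` works.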

Procedure:

- Try the brctl command

sjakhwal@rtxl3rld05:~$ sudo brctl --help

Usage: brctl [commands]

commands:

addbr <bridge> add bridge

delbr <bridge> delete bridge

addif <bridge> <device> add interface to bridge

delif <bridge> <device> delete interface from bridge

hairpin <bridge> <port> {on|off} turn hairpin on/off

setageing <bridge> <time> set ageing time

setbridgeprio <bridge> <prio> set bridge priority

setfd <bridge> <time> set bridge forward delay

sethello <bridge> <time> set hello time

setmaxage <bridge> <time> set max message age

setpathcost <bridge> <port> <cost> set path cost

setportprio <bridge> <port> <prio> set port priority

show [ <bridge> ] show a list of bridges

showmacs <bridge> show a list of mac addrs

showstp <bridge> show bridge stp info

stp <bridge> {on|off} turn stp on/off

- Create a test bridge. Note: virbr0 is the default bridge created by libvirt when it is installed; it is not part of this lab

sjakhwal@rtxl3rld05:~$ sudo brctl addbr testBr
sjakhwal@rtxl3rld05:~$ sudo brctl show
bridge name     bridge id               STP enabled     interfaces
testBr          8000.000acd27b824       no
virbr0          8000.000000000000       yes
sjakhwal@rtxl3rld05:~$ ip addr
10: testBr: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default
    link/ether 00:0a:cd:27:b8:24 brd ff:ff:ff:ff:ff:ff

- Bring the test bridge to the UP state

sjakhwal@rtxl3rld05:~$ sudo ip link set testBr up
sjakhwal@rtxl3rld05:~$ ip addr
10: testBr: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 00:0a:cd:27:b8:24 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::20a:cdff:fe27:b824/64 scope link
       valid_lft forever preferred_lft forever

- Attach a physical port to the test bridge. In this case I am using the eth3 physical port. Note: this step is needed only if you want external connectivity for your bridge; otherwise you can skip it

sjakhwal@rtxl3rld05:~$ ifconfig eth3
eth3      Link encap:Ethernet  HWaddr 00:0a:cd:27:b8:24
          inet6 addr: fe80::20a:cdff:fe27:b824/64 Scope:Link
          UP BROADCAST RUNNING PROMISC MULTICAST  MTU:1500  Metric:1
          RX packets:3413 errors:0 dropped:283 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:1352737 (1.3 MB)  TX bytes:648 (648.0 B)
sjakhwal@rtxl3rld05:~$ sudo brctl addif testBr eth3
sjakhwal@rtxl3rld05:~$ sudo brctl show
bridge name     bridge id               STP enabled     interfaces
testBr          8000.000acd27b824       no              eth3
virbr0          8000.000000000000       yes

- To make the settings persistent we need to update the interfaces file.

- I am using Ubuntu, so my changes are in /etc/network/interfaces. On CentOS you need to create an ifcfg-testBr file under /etc/sysconfig/network-scripts.

- Restart network manager to activate the changes: sudo service network-manager restart

Note that the IP address has moved from the physical interface to the bridge. There is no IP address configured on the physical interface

sjakhwal@rtxl3rld05:~$ cat interfaces
auto eth3
iface eth3 inet manual
    pre-up ifconfig eth3 up

## test bridge
auto testBr
iface testBr inet static
    bridge_ports eth3
    bridge_stp off
    bridge_fd 0.0
    address 192.168.10.100
    netmask 255.255.255.0
    network 192.168.10.0
    broadcast 192.168.10.255
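The network and broadcast values in the stanza above follow directly from the address and netmask, so they are easy to get wrong when you copy the stanza for a different subnet. The sketch below recomputes them with plain bash arithmetic; the function names (`ip_to_int`, `int_to_ip`, `subnet_fields`) are hypothetical helpers written for this lab.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    # Split the argument on "." into four positional parameters
    set -- $1
    echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print the "network" and "broadcast" lines for an address/netmask pair.
subnet_fields() {
    local addr mask
    addr=$(ip_to_int "$1")
    mask=$(ip_to_int "$2")
    # network   = address AND netmask
    echo "network $(int_to_ip $(( addr & mask )))"
    # broadcast = address OR inverted netmask (kept to 32 bits)
    echo "broadcast $(int_to_ip $(( addr | (~mask & 0xFFFFFFFF) )))"
}

subnet_fields 192.168.10.100 255.255.255.0
```

For the address and netmask used here this prints network 192.168.10.0 and broadcast 192.168.10.255, matching the values in the interfaces file.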

- Make sure the bridge gets the IP address and is UP and RUNNING

sjakhwal@rtxl3rld05:/etc/network$ ifconfig testBr
testBr    Link encap:Ethernet  HWaddr 00:0a:cd:27:b8:24
          inet addr:192.168.10.100  Bcast:192.168.10.255  Mask:255.255.255.0
          inet6 addr: fe80::20a:cdff:fe27:b824/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:64 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:11747 (11.7 KB)  TX bytes:648 (648.0 B)
sjakhwal@rtxl3rld05:~$ ip addr
5: testBr: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 00:0a:cd:27:b8:24 brd ff:ff:ff:ff:ff:ff
    inet 192.168.10.100/24 brd 192.168.10.255 scope global testBr
       valid_lft forever preferred_lft forever
    inet6 fe80::20a:cdff:fe27:b824/64 scope link
       valid_lft forever preferred_lft forever
sjakhwal@rtxl3rld05:~$ ifconfig eth3
eth3      Link encap:Ethernet  HWaddr 00:0a:cd:27:b8:24
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:15 errors:0 dropped:0 overruns:0 frame:0
          TX packets:2330 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:1206 (1.2 KB)  TX bytes:447039 (447.0 KB)

- Create two virtual interfaces and connect them to the test bridge.

sjakhwal@rtxl3rld05:~$ sudo tunctl
Set 'tap0' persistent and owned by uid 0
sjakhwal@rtxl3rld05:$ sudo tunctl
Set 'tap1' persistent and owned by uid 0
sjakhwal@rtxl3rld05:/sbin$ ip addr
16: tap0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 500
    link/ether de:57:03:0e:b7:ba brd ff:ff:ff:ff:ff:ff
17: tap1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 500
    link/ether 22:f8:6e:cf:0e:7a brd ff:ff:ff:ff:ff:ff
sjakhwal@rtxl3rld05:$ sudo ip link set tap0 up
sjakhwal@rtxl3rld05:$ sudo ip link set tap1 up
sjakhwal@rtxl3rld05:~$ sudo brctl addif testBr tap0
sjakhwal@rtxl3rld05:~$ sudo brctl addif testBr tap1
sjakhwal@rtxl3rld05:$ sudo brctl show
bridge name     bridge id               STP enabled     interfaces
testBr          8000.000acd27b824       no              eth3
                                                        tap0
                                                        tap1
virbr0          8000.000000000000       yes
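The bridge and tap steps above use brctl and tunctl. The same setup can also be expressed with the iproute2 tools (`ip` and `bridge`), which is handy on systems where bridge-utils or uml-utilities are not installed. The following is a sketch, not the procedure used in this lab: `run`, `build_bridge` and the `DRY_RUN` variable are hypothetical names, and by default the script only prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# DRY_RUN=1 (default) only prints each command.
# Set DRY_RUN=0 and run as root to actually execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# build_bridge <bridge> <port>...
# Creates the bridge, brings it up, then brings up and attaches each port.
build_bridge() {
    local bridge="$1" port
    shift
    run ip link add name "$bridge" type bridge      # like: brctl addbr
    run ip link set dev "$bridge" up
    for port in "$@"; do
        run ip link set dev "$port" up
        run ip link set dev "$port" master "$bridge" # like: brctl addif
    done
    run bridge link show                             # lists the bridge ports
}

# Create the two tap devices (like: tunctl), then the 3-port bridge
run ip tuntap add dev tap0 mode tap
run ip tuntap add dev tap1 mode tap
build_bridge testBr eth3 tap0 tap1
```

Running it in dry-run mode first lets you review the exact commands before anything on the host is changed.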

- At this point we have a 3-port bridge. We need hosts connected to the bridge ports so that we can test it. The next step simulates hosts using VMs.

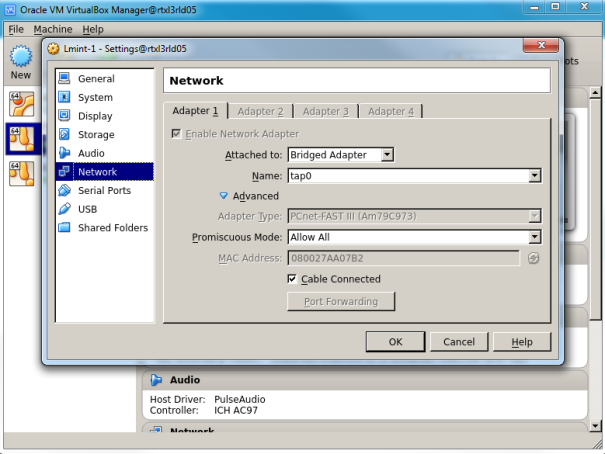

- In VirtualBox, start two VMs. I am using Linux Mint for my VMs. In the VirtualBox network settings, set ‘Attached to’ to Bridged Adapter and, in the name drop-down, select ‘tap0’ for the first VM and ‘tap1’ for the second VM. Set promiscuous mode to ‘Allow All’

- Set up IP addresses on the VMs

- VM1: sudo ifconfig eth0 192.168.10.1 up

- VM2: sudo ifconfig eth0 192.168.10.2 up

- Ping from VM1 to VM2. The ping is successful

- Ping the VMs from the host machine. The ping is successful

sjakhwal@rtxl3rld05:/etc/network$ ip addr
24: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master testBr state UP group default qlen 500
    link/ether ce:b5:77:bd:f5:79 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::ccb5:77ff:febd:f579/64 scope link
       valid_lft forever preferred_lft forever
25: tap1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master testBr state UP group default qlen 500
    link/ether 9e:53:00:9c:cb:c7 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::9c53:ff:fe9c:cbc7/64 scope link
       valid_lft forever preferred_lft forever
sjakhwal@rtxl3rld05:/etc/network$ ping 192.168.10.1
PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
64 bytes from 192.168.10.1: icmp_seq=1 ttl=64 time=0.506 ms
64 bytes from 192.168.10.1: icmp_seq=2 ttl=64 time=0.352 ms
64 bytes from 192.168.10.1: icmp_seq=3 ttl=64 time=0.340 ms
64 bytes from 192.168.10.1: icmp_seq=4 ttl=64 time=0.334 ms
64 bytes from 192.168.10.1: icmp_seq=5 ttl=64 time=0.397 ms
^C
--- 192.168.10.1 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 3998ms

- To prove that the VMs are in fact connected to the tap interfaces, let's run a quick test: disable a tap interface and check ping connectivity to the VM

sjakhwal@rtxl3rld05:/etc/network$ ping 192.168.10.1
PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
64 bytes from 192.168.10.1: icmp_seq=1 ttl=64 time=0.162 ms
64 bytes from 192.168.10.1: icmp_seq=2 ttl=64 time=0.211 ms
^C
--- 192.168.10.1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 0.162/0.186/0.211/0.028 ms
#disable tap interface
sjakhwal@rtxl3rld05:/etc/network$ sudo ip link set tap0 down
[sudo] password for sjakhwal:
sjakhwal@rtxl3rld05:/etc/network$ ping 192.168.10.1
PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
^C
--- 192.168.10.1 ping statistics ---
6 packets transmitted, 0 received, 100% packet loss, time 5039ms
sjakhwal@rtxl3rld05:/etc/network$ ip addr
24: tap0: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master testBr state DOWN group default qlen 500
    link/ether ce:b5:77:bd:f5:79 brd ff:ff:ff:ff:ff:ff
25: tap1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master testBr state UP group default qlen 500
    link/ether 9e:53:00:9c:cb:c7 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::9c53:ff:fe9c:cbc7/64 scope link
       valid_lft forever preferred_lft forever
#enable tap interface
sjakhwal@rtxl3rld05:/etc/network$ sudo ip link set tap0 up
sjakhwal@rtxl3rld05:/etc/network$ ping 192.168.10.1
PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
64 bytes from 192.168.10.1: icmp_seq=1 ttl=64 time=0.405 ms
64 bytes from 192.168.10.1: icmp_seq=2 ttl=64 time=0.165 ms
64 bytes from 192.168.10.1: icmp_seq=3 ttl=64 time=0.234 ms
64 bytes from 192.168.10.1: icmp_seq=4 ttl=64 time=0.299 ms
^C
--- 192.168.10.1 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 2999ms
rtt min/avg/max/mdev = 0.165/0.275/0.405/0.090 ms

Firewalling on a Linux bridge is provided by ebtables. ebtables works at the link layer, so in this lab filtering is based on MAC addresses. Let's try some basic rules

- FORWARD chain: the FORWARD chain applies when traffic goes from one port of the bridge to another, in our case between the VMs

- Add a MAC filter rule so that traffic from VM1 to VM2 is dropped. I am using VM1's source MAC to filter the traffic. Run ping from VM1 to VM2 and make sure the ping is dropped

sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -A FORWARD -s 8:0:27:aa:7:b2 -j DROP
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L
Bridge table: filter
Bridge chain: INPUT, entries: 0, policy: ACCEPT
Bridge chain: FORWARD, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 0, policy: ACCEPT
Bridge chain: FORWARD, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP , pcnt = 0 -- bcnt = 0
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 0, policy: ACCEPT
Bridge chain: FORWARD, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP , pcnt = 5 -- bcnt = 420
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 0, policy: ACCEPT
Bridge chain: FORWARD, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP , pcnt = 11 -- bcnt = 756
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$
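The MAC in the rule above is written the way ebtables lists it, without leading zeros (8:0:27:aa:7:b2), while ifconfig on the VM shows the zero-padded form. When you compare the two it can help to normalize the address first. A minimal sketch, where `normalize_mac` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Pad every octet of a colon-separated MAC address to two lowercase hex digits.
normalize_mac() {
    local IFS=: octet out=""
    for octet in $1; do
        # "0x" prefix makes printf read the octet as hexadecimal
        out+=$(printf '%02x:' "0x$octet")
    done
    # Drop the trailing colon
    echo "${out%:}"
}

normalize_mac 8:0:27:aa:7:b2
```

For the rule above this prints 08:00:27:aa:07:b2, which is the form to look for in the VM's own interface listing.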

- INPUT chain: the INPUT chain applies to traffic coming in to the local host. In our case the bridge (192.168.10.100) is the local host

- Add a MAC filter rule so that traffic from VM1 to the bridge is dropped. Run ping from VM1 to the bridge IP and make sure the ping is dropped

sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -A INPUT -s 8:0:27:aa:7:b2 -j DROP
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L
Bridge table: filter
Bridge chain: INPUT, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP
Bridge chain: FORWARD, entries: 0, policy: ACCEPT
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP , pcnt = 11 -- bcnt = 418
Bridge chain: FORWARD, entries: 0, policy: ACCEPT
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP , pcnt = 14 -- bcnt = 502
Bridge chain: FORWARD, entries: 0, policy: ACCEPT
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 1, policy: ACCEPT
-s 8:0:27:aa:7:b2 -j DROP , pcnt = 17 -- bcnt = 586
Bridge chain: FORWARD, entries: 0, policy: ACCEPT
Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
sjakhwal@rtxl3rld05:/etc/network$

- OUTPUT chain: the OUTPUT chain applies to traffic going out from the local host. In our case the bridge (192.168.10.100) is the local host

- Add a MAC filter rule so that traffic from the bridge to VM1 is dropped. The --Lc option shows the packet and byte counts each time the rule is hit

sjakhwal@rtxl3rld05:~$ sudo ebtables -A OUTPUT -d 8:0:27:aa:7:b2 -j DROP
sjakhwal@rtxl3rld05:~$ sudo ebtables -L
Bridge table: filter
Bridge chain: INPUT, entries: 0, policy: ACCEPT
Bridge chain: FORWARD, entries: 0, policy: ACCEPT
Bridge chain: OUTPUT, entries: 1, policy: ACCEPT
-d 8:0:27:aa:7:b2 -j DROP
sjakhwal@rtxl3rld05:~$ ping 192.168.10.1
PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
^C
--- 192.168.10.1 ping statistics ---
4 packets transmitted, 0 received, 100% packet loss, time 3024ms
sjakhwal@rtxl3rld05:~$ sudo ebtables -L --Lc
Bridge table: filter
Bridge chain: INPUT, entries: 0, policy: ACCEPT
Bridge chain: FORWARD, entries: 0, policy: ACCEPT
Bridge chain: OUTPUT, entries: 1, policy: ACCEPT
-d 8:0:27:aa:7:b2 -j DROP , pcnt = 7 -- bcnt = 420
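When you re-run the listing to watch a rule being hit, only the pcnt and bcnt fields change. The sketch below pulls just those counters out of the listing so they are easier to compare between runs. `rule_counters` is a hypothetical helper name, and the pattern assumes the `, pcnt = N -- bcnt = N` layout shown in the output above; other ebtables versions may format counters differently.

```shell
#!/usr/bin/env bash
# Read an "ebtables -L --Lc" listing on stdin and print one summary line
# per rule: "<packets> packets, <bytes> bytes: <rule>".
rule_counters() {
    sed -n 's/^\(.*\) , pcnt = \([0-9][0-9]*\) -- bcnt = \([0-9][0-9]*\).*$/\2 packets, \3 bytes: \1/p'
}

# Sample lines copied from the listing above. On a live system, pipe in:
#   sudo ebtables -L --Lc | rule_counters
printf '%s\n' \
    'Bridge chain: OUTPUT, entries: 1, policy: ACCEPT' \
    '-d 8:0:27:aa:7:b2 -j DROP , pcnt = 7 -- bcnt = 420' | rule_counters
```

Lines that carry no counters, such as the chain headers, are skipped, so the output contains only the rules.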

You can delete the bridge using these commands

sjakhwal@rtxl3rld05:~$ brctl show
bridge name     bridge id               STP enabled     interfaces
testBr          8000.000acd27b824       no              eth3
                                                        tap0
                                                        tap1
virbr0          8000.000000000000       yes
#set bridge to down state
sjakhwal@rtxl3rld05:~$ sudo ip link set testBr down
[sudo] password for sjakhwal:
sjakhwal@rtxl3rld05:~$ sudo brctl delbr testBr
sjakhwal@rtxl3rld05:~$ sudo brctl show
bridge name     bridge id               STP enabled     interfaces
virbr0          8000.000000000000       yes
sjakhwal@rtxl3rld05:~$

References: