The goal of this lab is to learn Ansible basics and explore how it can help automate network devices.

Ansible is a configuration management tool for servers. It belongs to the same family of tools as Chef, Puppet and Salt. The difference is that Ansible is agentless, which means it does not require any agent running on the server you are configuring. This is a big plus, because network devices such as switches and routers can't be loaded with an agent.

Ansible does its job by executing modules. Modules are small programs, usually written in Python, and Ansible ships with a wide range of them. The Ansible documentation describes the available modules.

Ansible basically consists of these two components:

- Modules: programs to perform a task

- Inventory file: contains remote server information such as IP address and SSH connection details

When you install Ansible, its modules are installed as part of the package. In my environment they are located in this directory:

/usr/lib/python2.7/site-packages/ansible/modules

To list the modules installed on your machine, run:

$ansible-doc -l

To see a short usage snippet for a module (here, the file module), run:

$ansible-doc -s file
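To connect the ansible-doc output to an actual task, here is a minimal sketch that calls the file module from a playbook. It runs against localhost, so no inventory is needed yet; the directory /tmp/ansible-demo is a hypothetical example.

```yaml
---
# Minimal sketch: calling the file module from a playbook task.
# /tmp/ansible-demo is a hypothetical path used only for illustration.
- hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: Make sure a demo directory exists
      file:
        path: /tmp/ansible-demo
        state: directory
        mode: "0755"
```

Saved as, say, file-demo.yml (a hypothetical name), it runs with $ansible-playbook file-demo.yml. Each key under file: is one of the options that ansible-doc -s file lists.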

Prerequisite:

Install Ansible

$sudo pip install ansible

I am using CentOS 7.3 for this lab. This is my Ansible version:

$ansible --version

ansible 2.2.1.0

config file = /etc/ansible/ansible.cfg

configured module search path = Default w/o overrides

Topology:

I have two bare-metal servers. Ansible is installed on one of them (the Ansible server), and the other one (the remote server) is the server being configured.

Procedure:

Set up passwordless SSH

Let's set up passwordless access to the remote server so we don't have to type a password every time Ansible runs. Ansible prefers to log in using SSH keys. To set up passwordless access, run these commands on the Ansible server and the remote server.

In this example I am using username virtuora and password virtuora on the remote server.

1. Generate an SSH key pair for the user on both the Ansible server and the remote server.

$ssh-keygen -t rsa

2. Copy the Ansible server's public key to the remote server, and the remote server's public key to the Ansible server. Run this on the Ansible server for the first direction:

$ssh-copy-id -i ~/.ssh/id_rsa.pub virtuora@192.254.211.168

3. Test it by opening an SSH session from the Ansible server to the remote server and make sure no password is requested:

$ssh virtuora@192.254.211.168
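Once the inventory file described in the next section exists, Ansible itself can distribute the key. A sketch using the authorized_key module, assuming the key from step 1 is at ~/.ssh/id_rsa.pub on the Ansible server and the remote user is virtuora:

```yaml
---
# Sketch: install the Ansible server's public key for the remote user,
# as an alternative to ssh-copy-id.
# Assumes the key pair from step 1 is at ~/.ssh/id_rsa.pub on the Ansible server.
- hosts: vnc-server
  gather_facts: false
  tasks:
    - name: Add the Ansible server's public key to virtuora's authorized_keys
      authorized_key:
        user: virtuora
        state: present
        key: "{{ lookup('file', lookup('env', 'HOME') + '/.ssh/id_rsa.pub') }}"
```

Because the key is not installed yet, run it once with -k so Ansible asks for the SSH password, for example $ansible-playbook -i hosts push-key.yml -k (push-key.yml is a hypothetical file name). Password-based SSH with -k needs the sshpass program installed on the Ansible server.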

Inventory File

The inventory file contains remote server reachability information (IP address, SSH user, port number, etc.). It is a simple text file listing remote server IP addresses, optionally with SSH connection details. I named my inventory file 'hosts'. I have only one remote server (group vnc-server) to configure:

[vnc-server]

192.254.211.168 ansible_connection=ssh ansible_port=22 ansible_user=virtuora

If you have multiple remote servers, you can group them like this:

[db-servers]

192.254.211.166

192.254.211.167

[web-server]

192.254.211.165
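Connection settings do not have to sit on the inventory line. As a sketch, Ansible also reads per-group variables from a group_vars directory next to the inventory file, so the settings for the vnc-server group could live in group_vars/vnc-server.yml:

```yaml
---
# Sketch of group_vars/vnc-server.yml, placed next to the 'hosts' inventory file.
# These variables apply to every host in the [vnc-server] group,
# so the inventory line can shrink to just the IP address.
ansible_connection: ssh
ansible_port: 22
ansible_user: virtuora
```

With this file in place, the [vnc-server] entry in hosts only needs 192.254.211.168.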

There are two ways to run Ansible:

- Ad hoc commands, run directly on the command line

- Playbooks, which are YAML files that can use Jinja2 templating

Ansible ad hoc commands

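This is the simplest Ansible ad hoc command. It runs against localhost, so no inventory file is needed: -i "localhost," passes an inline host list (the trailing comma marks it as a list rather than a file name), and -c local runs the command locally instead of over SSH.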

$ansible all -i "localhost," -c local -m shell -a 'echo hello world'

localhost | SUCCESS | rc=0 >>

hello world
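The same hello world command can also be written as a short playbook, which previews the playbook section later in this lab. A sketch (hello.yml is a hypothetical file name):

```yaml
---
# Playbook version of the ad hoc "hello world" command above.
- hosts: localhost
  connection: local        # same effect as -c local
  gather_facts: false
  tasks:
    - name: Run echo through the shell module
      shell: echo hello world
      register: hello      # keep the module result for the next task

    - name: Show what the command printed
      debug:
        var: hello.stdout
```

Run it with $ansible-playbook -i "localhost," hello.yml; the debug task prints the registered stdout.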

Try the ad hoc command below with the ping module. This is not a traditional ICMP ping: if it succeeds, it means the Ansible server can log in to the remote server and the remote server has a usable Python installation.

$ansible -i hosts -m ping vnc-server

-i: specify the inventory file, in this case ‘hosts’

-m: Ansible module name, in this case ping

vnc-server: the remote server group name from the inventory file

$ansible -i hosts -m ping vnc-server

192.254.211.168 | SUCCESS => {

"changed": false,

"ping": "pong"

}

Now try the same command with increased verbosity (the -vvv flag); it helps us understand Ansible's internals. If you study the log, you will find that Ansible first copies the ping module from the Ansible server to the remote server over SFTP, executes the module on the remote server with Python, and then deletes it.

Note: In a sense, Ansible is not completely agentless. It requires the remote server to support SFTP (to copy the module) and Python (to execute it). This can be a problem for network devices (switches and routers) that lack these capabilities.

$ansible -i hosts -m ping vnc-server -vvv

Using /etc/ansible/ansible.cfg as config file

Using module file /usr/lib/python2.7/site-packages/ansible/modules/core/system/ping.py

ESTABLISH SSH CONNECTION FOR USER: virtuora

SSH: EXEC ssh -C -o ControlMaster=auto -o ControlPersist=60s -o Port=22 -o KbdInteractiveAuthentication=no -o PreferredAuthentications=gssapi-with-mic,gssapi-keyex,hostbased,publickey -o PasswordAuthentication=no -o User=virtuora -o ConnectTimeout=10 -o ControlPath=/home/divine/.ansible/cp/ansible-ssh-%h-%p-%r 192.254.211.168 '/bin/sh -c '"'"'( umask 77 && mkdir -p "` echo ~/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801 `" && echo ansible-tmp-1491254258.92-242743076706801="` echo ~/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801 `" ) && sleep 0'"'"''

PUT /tmp/tmpXP3MNY TO /home/virtuora/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801/ping.py

SSH: EXEC sftp -b - -C -o ControlMaster=auto -o ControlPersist=60s -o Port=22 -o KbdInteractiveAuthentication=no -o PreferredAuthentications=gssapi-with-mic,gssapi-keyex,hostbased,publickey -o PasswordAuthentication=no -o User=virtuora -o ConnectTimeout=10 -o ControlPath=/home/divine/.ansible/cp/ansible-ssh-%h-%p-%r '[192.254.211.168]'

ESTABLISH SSH CONNECTION FOR USER: virtuora

SSH: EXEC ssh -C -o ControlMaster=auto -o ControlPersist=60s -o Port=22 -o KbdInteractiveAuthentication=no -o PreferredAuthentications=gssapi-with-mic,gssapi-keyex,hostbased,publickey -o PasswordAuthentication=no -o User=virtuora -o ConnectTimeout=10 -o ControlPath=/home/divine/.ansible/cp/ansible-ssh-%h-%p-%r 192.254.211.168 '/bin/sh -c '"'"'chmod u+x /home/virtuora/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801/ /home/virtuora/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801/ping.py && sleep 0'"'"''

ESTABLISH SSH CONNECTION FOR USER: virtuora

SSH: EXEC ssh -C -o ControlMaster=auto -o ControlPersist=60s -o Port=22 -o KbdInteractiveAuthentication=no -o PreferredAuthentications=gssapi-with-mic,gssapi-keyex,hostbased,publickey -o PasswordAuthentication=no -o User=virtuora -o ConnectTimeout=10 -o ControlPath=/home/divine/.ansible/cp/ansible-ssh-%h-%p-%r -tt 192.254.211.168 '/bin/sh -c '"'"'/usr/bin/python /home/virtuora/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801/ping.py; rm -rf "/home/virtuora/.ansible/tmp/ansible-tmp-1491254258.92-242743076706801/" > /dev/null 2>&1 && sleep 0'"'"''

192.254.211.168 | SUCCESS => {

"changed": false,

"invocation": {

"module_args": {

"data": null

},

"module_name": "ping"

},

"ping": "pong"

}
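Because modules such as ping need Python on the remote side, a common workaround on servers is to bootstrap Python with the raw module, which sends a plain command over SSH. A sketch, assuming a yum-based remote server and sudo rights for the SSH user:

```yaml
---
# Sketch: bootstrap Python with the raw module, which runs a plain command
# over SSH and needs neither SFTP nor Python on the remote side.
# Assumes a yum-based remote server and sudo rights for the SSH user.
- hosts: vnc-server
  gather_facts: false     # fact gathering is itself a Python module
  become: true
  tasks:
    - name: Install Python if it is missing
      raw: test -e /usr/bin/python || yum install -y python
```

Run it with -K to supply the sudo password. After it completes, regular modules like ping can execute on that server.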

There are some variations of the ad hoc command. For example, if you want to specify the user on the command line instead of in the inventory file (as I did), use the -u option:

$ansible -i hosts -m ping vnc-server -u virtuora

167.254.211.168 | SUCCESS => {

"changed": false,

"ping": "pong"

}

Privilege Escalation

Privilege escalation allows you to become a different user from the one you logged in with. It is useful when some commands need to run with sudo.

For example, installing a package on the remote server requires sudo access. You can run it this way:

$ansible -i hosts -m yum -a "name=bridge-utils state=present" vnc-server --become -K

--become: enable privilege escalation

-K: prompt for the sudo password

$ansible -i hosts -m yum -a "name=bridge-utils state=present" vnc-server --become -K

SUDO password:

167.254.211.168 | SUCCESS => {

"changed": false,

"msg": "",

"rc": 0,

"results": [

"bridge-utils-1.5-9.el7.x86_64 providing bridge-utils is already installed"

]

}

Ansible Playbook

Running Ansible from the command line is not very efficient for repeated work; a playbook lets you run multiple tasks at once, and you can share it with other users. A playbook is written in YAML and can use Jinja2 templating. To learn the basics of playbooks, see the playbook section of the Ansible documentation.

This is a simple playbook that installs the git package on the remote server using yum. It defines a variable (vars) and enables privilege escalation with become: true and become_method: sudo.

$ cat yum-playbook.yml

```yaml
---
- hosts: vnc-server
  vars:
    package_name: git
  tasks:
    - name: Install git package
      yum:
        state: present
        name: "{{ package_name }}"
      become: true
      become_method: sudo
```

hosts: the remote server (group) name from the inventory file

vars: variable definitions

become: enables privilege escalation

become_method: the privilege escalation method

Run the playbook with -K to be prompted for the sudo password:

$ansible-playbook -i hosts yum-playbook.yml -K

SUDO password:

PLAY [vnc-server] **************************************************************

TASK [setup] *******************************************************************

ok: [192.254.211.168]

TASK [Install git package] *****************************************************

changed: [192.254.211.168]

PLAY RECAP *********************************************************************

192.254.211.168 : ok=2 changed=1 unreachable=0 failed=0

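changed=1 means one change was applied to the remote server; in this case, the git package was installed. ok=2 counts both the automatic setup (fact gathering) task and the install task.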

Run it again

$ansible-playbook -i hosts yum-playbook.yml -K

SUDO password:

PLAY [vnc-server] **************************************************************

TASK [setup] *******************************************************************

ok: [192.254.211.168]

TASK [Install git package] *****************************************************

ok: [192.254.211.168]

PLAY RECAP *********************************************************************

192.254.211.168 : ok=2 changed=0 unreachable=0 failed=0

This time changed=0 because the git package was already present, so no action was performed on the remote server. This is Ansible's idempotent behavior: it performs an action only when needed.
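yum is idempotent because the module checks the current state before acting. The command and shell modules cannot do that on their own, so they report changed on every run. A sketch of the command module's creates argument, which skips the task when a given path already exists; the archive and directory names are hypothetical:

```yaml
---
# Sketch: making a command idempotent with 'creates'.
# /tmp/app.tar.gz and /opt/app are hypothetical paths for illustration.
- hosts: vnc-server
  become: true
  tasks:
    - name: Unpack the application only if it is not unpacked yet
      command: tar xzf /tmp/app.tar.gz -C /opt
      args:
        creates: /opt/app   # skip this task when /opt/app already exists
```

On the second run the task reports no change, so the PLAY RECAP shows changed=0, just like the yum example.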

Configure Network device using Ansible

Configuring servers is all well and good, but I am more interested in configuring network devices such as switches and routers, and I wanted to see what Ansible can do for them. In my case I have an optical network switch that supports SSH but not Python or SFTP.

As I mentioned earlier, Ansible modules need SFTP and Python on the remote server. Let's see how to configure an optical switch under these constraints.

Ansible has a module called 'raw' (see its documentation for details). It is the only module I found that doesn't require SFTP or Python on the remote server: it sends commands over the open SSH connection instead of copying a module to the remote server.

This is what my playbook with the raw module looks like. It provisions ports on my optical switch.

The playbook has two tasks: 1) configure the ports, and 2) fail the run if port configuration failed.

In this playbook I define a dictionary of ports (slot, port number and port type for each), and the configure ports task iterates through this dictionary to configure each port. The shelf and subslot numbers are separate variables shared by all ports. I also use 'register' to save the output of the configure ports task; the next task uses it to decide whether to fail.

Setting gather_facts: false is important here so Ansible doesn't try to gather facts from the network device as it does on a server; fact gathering runs the setup module, which needs Python on the device. This parameter is true by default.

```yaml
---
- hosts: optical-switch
  remote_user: virtuora
  gather_facts: false
  vars:
    ports:
      P1:
        slot_no: 2
        port_no: 7
        port_type: 10GER
      P2:
        slot_no: 2
        port_no: 8
        port_type: 10GER
      P3:
        slot_no: 2
        port_no: 9
        port_type: 10GER
    shelf_no: 1
    subslot_no: 0
  tasks:
    - name: configure ports
      raw: |
        configure
        set eqpt shelf "{{ shelf_no }}" slot {{ item.value.slot_no }} subslot "{{ subslot_no }}" port {{ item.value.port_no }} pluggableInterfaceType {{ item.value.port_type }} admin-status up
        commit
      register: port_config
      with_dict: "{{ ports }}"

    - name: Set fail when port configuration fail
      fail:
        msg: "Configure ports failed"
      when: item.stdout.find('error') != -1
      with_items: "{{ port_config.results }}"
      #debug: msg="{{ port_config }}"
```

Run the playbook. I don't have passwordless SSH to the device, so I use -k to enter the SSH password at run time:

$ ansible-playbook -i hosts playbook-s100.yml -k

SSH password:

PLAY [s100-1] ******************************************************************

TASK [configure ports] *********************************************************

changed: [192.254.210.33] => (item={'key': u'P2', 'value': {u'slot_no': 2, u'port_no': 8, u'port_type': u'10GER'}})

changed: [192.254.210.33] => (item={'key': u'P3', 'value': {u'slot_no': 2, u'port_no': 9, u'port_type': u'10GER'}})

changed: [192.254.210.33] => (item={'key': u'P1', 'value': {u'slot_no': 2, u'port_no': 7, u'port_type': u'10GER'}})

TASK [Set pass/fail] ***********************************************************

skipping: [192.254.210.33] => (item={u'changed': True, u'_ansible_no_log': False, u'stdout': u'Commit complete.\r\n', u'_ansible_item_result': True, u'item': {u'key': u'P2', u'value': {u'slot_no': 2, u'port_no': 8, u'port_type': u'10GER'}}, u'stderr': u'\nWelcome to the FUJITSU 1FINITY S100\nCopyright Fujitsu Limited.\n\nShared connection to 192.254.210.33 closed.\r\n', u'rc': 0, u'invocation': {u'module_name': u'raw', u'module_args': {u'_raw_params': u'configure\n set eqpt shelf "1" slot 2 subslot "0" port 8 pluggableInterfaceType 10GER admin-status up\n commit'}}, u'stdout_lines': [u'Commit complete.']})

skipping: [192.254.210.33] => (item={u'changed': True, u'_ansible_no_log': False, u'stdout': u'Commit complete.\r\n', u'_ansible_item_result': True, u'item': {u'key': u'P3', u'value': {u'slot_no': 2, u'port_no': 9, u'port_type': u'10GER'}}, u'stderr': u'Shared connection to 192.254.210.33 closed.\r\n', u'rc': 0, u'invocation': {u'module_name': u'raw', u'module_args': {u'_raw_params': u'configure\n set eqpt shelf "1" slot 2 subslot "0" port 9 pluggableInterfaceType 10GER admin-status up\n commit'}}, u'stdout_lines': [u'Commit complete.']})

skipping: [192.254.210.33] => (item={u'changed': True, u'_ansible_no_log': False, u'stdout': u'Commit complete.\r\n', u'_ansible_item_result': True, u'item': {u'key': u'P1', u'value': {u'slot_no': 2, u'port_no': 7, u'port_type': u'10GER'}}, u'stderr': u'Shared connection to 192.254.210.33 closed.\r\n', u'rc': 0, u'invocation': {u'module_name': u'raw', u'module_args': {u'_raw_params': u'configure\n set eqpt shelf "1" slot 2 subslot "0" port 7 pluggableInterfaceType 10GER admin-status up\n commit'}}, u'stdout_lines': [u'Commit complete.']})

PLAY RECAP *********************************************************************

192.254.210.33 : ok=1 changed=1 unreachable=0 failed=0

The playbook ran fine and configured three ports on the optical switch.

Run the playbook again:

$ ansible-playbook -i hosts playbook-s100.yml -k

SSH password:

PLAY [s100-1] ******************************************************************

TASK [configure ports] *********************************************************

changed: [192.254.210.33] => (item={'key': u'P2', 'value': {u'slot_no': 2, u'port_no': 8, u'port_type': u'10GER'}})

changed: [192.254.210.33] => (item={'key': u'P3', 'value': {u'slot_no': 2, u'port_no': 9, u'port_type': u'10GER'}})

changed: [192.254.210.33] => (item={'key': u'P1', 'value': {u'slot_no': 2, u'port_no': 7, u'port_type': u'10GER'}})

TASK [Set pass/fail] ***********************************************************

failed: [192.254.210.33] (item={u'changed': True, u'_ansible_no_log': False, u'stdout': u'Error: access denied\r\n[error][2017-04-10 17:04:47]\r\n', u'_ansible_item_result': True, u'item': {u'key': u'P2', u'value': {u'slot_no': 2, u'port_no': 8, u'port_type': u'10GER'}}, u'stderr': u'Shared connection to 192.254.210.33 closed.\r\n', u'rc': 0, u'invocation': {u'module_name': u'raw', u'module_args': {u'_raw_params': u'configure\n set eqpt shelf "1" slot 2 subslot "0" port 8 pluggableInterfaceType 10GER admin-status up\n commit'}}, u'stdout_lines': [u'Error: access denied', u'[error][2017-04-10 17:04:47]']}) => {"failed": true, "item": {"changed": true, "invocation": {"module_args": {"_raw_params": "configure\n set eqpt shelf \"1\" slot 2 subslot \"0\" port 8 pluggableInterfaceType 10GER admin-status up\n commit"}, "module_name": "raw"}, "item": {"key": "P2", "value": {"port_no": 8, "port_type": "10GER", "slot_no": 2}}, "rc": 0, "stderr": "Shared connection to 192.254.210.33 closed.\r\n", "stdout": "Error: access denied\r\n[error][2017-04-10 17:04:47]\r\n", "stdout_lines": ["Error: access denied", "[error][2017-04-10 17:04:47]"]}, "msg": "The command failed"}

failed: [192.254.210.33] (item={u'changed': True, u'_ansible_no_log': False, u'stdout': u'Error: access denied\r\n[error][2017-04-10 17:04:47]\r\n', u'_ansible_item_result': True, u'item': {u'key': u'P3', u'value': {u'slot_no': 2, u'port_no': 9, u'port_type': u'10GER'}}, u'stderr': u'Shared connection to 192.254.210.33 closed.\r\n', u'rc': 0, u'invocation': {u'module_name': u'raw', u'module_args': {u'_raw_params': u'configure\n set eqpt shelf "1" slot 2 subslot "0" port 9 pluggableInterfaceType 10GER admin-status up\n commit'}}, u'stdout_lines': [u'Error: access denied', u'[error][2017-04-10 17:04:47]']}) => {"failed": true, "item": {"changed": true, "invocation": {"module_args": {"_raw_params": "configure\n set eqpt shelf \"1\" slot 2 subslot \"0\" port 9 pluggableInterfaceType 10GER admin-status up\n commit"}, "module_name": "raw"}, "item": {"key": "P3", "value": {"port_no": 9, "port_type": "10GER", "slot_no": 2}}, "rc": 0, "stderr": "Shared connection to 192.254.210.33 closed.\r\n", "stdout": "Error: access denied\r\n[error][2017-04-10 17:04:47]\r\n", "stdout_lines": ["Error: access denied", "[error][2017-04-10 17:04:47]"]}, "msg": "The command failed"}

failed: [192.254.210.33] (item={u'changed': True, u'_ansible_no_log': False, u'stdout': u'Error: access denied\r\n[error][2017-04-10 17:04:48]\r\n', u'_ansible_item_result': True, u'item': {u'key': u'P1', u'value': {u'slot_no': 2, u'port_no': 7, u'port_type': u'10GER'}}, u'stderr': u'Shared connection to 192.254.210.33 closed.\r\n', u'rc': 0, u'invocation': {u'module_name': u'raw', u'module_args': {u'_raw_params': u'configure\n set eqpt shelf "1" slot 2 subslot "0" port 7 pluggableInterfaceType 10GER admin-status up\n commit'}}, u'stdout_lines': [u'Error: access denied', u'[error][2017-04-10 17:04:48]']}) => {"failed": true, "item": {"changed": true, "invocation": {"module_args": {"_raw_params": "configure\n set eqpt shelf \"1\" slot 2 subslot \"0\" port 7 pluggableInterfaceType 10GER admin-status up\n commit"}, "module_name": "raw"}, "item": {"key": "P1", "value": {"port_no": 7, "port_type": "10GER", "slot_no": 2}}, "rc": 0, "stderr": "Shared connection to 192.254.210.33 closed.\r\n", "stdout": "Error: access denied\r\n[error][2017-04-10 17:04:48]\r\n", "stdout_lines": ["Error: access denied", "[error][2017-04-10 17:04:48]"]}, "msg": "The command failed"}

to retry, use: --limit @/home/divine/ansible/vnc_install/playbook-s100.retry

PLAY RECAP *********************************************************************

192.254.210.33 : ok=1 changed=1 unreachable=0 failed=1

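As you can see, failed=1: the switch denied the port configuration because the ports were already provisioned. Note that the configure ports task still shows changed for every port; the raw module cannot tell whether a command changed anything, so it always reports a change.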
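One way to get clearer results from raw is to decide failure and change on the task itself with failed_when and changed_when. A sketch, trimmed to one port for brevity; the matched strings 'error' and 'Commit complete' are taken from the switch output above and are assumptions for any other device:

```yaml
---
# Sketch: judge failure and change on the raw task itself.
# 'error' and 'Commit complete' come from this switch's output; adjust per device.
- hosts: optical-switch
  remote_user: virtuora
  gather_facts: false
  vars:
    shelf_no: 1
    subslot_no: 0
    ports:
      P1: {slot_no: 2, port_no: 7, port_type: 10GER}
  tasks:
    - name: configure ports
      raw: |
        configure
        set eqpt shelf "{{ shelf_no }}" slot {{ item.value.slot_no }} subslot "{{ subslot_no }}" port {{ item.value.port_no }} pluggableInterfaceType {{ item.value.port_type }} admin-status up
        commit
      register: port_config
      with_dict: "{{ ports }}"
      # inside the loop, port_config is the current port's result
      failed_when: "'error' in port_config.stdout | lower"
      changed_when: "'Commit complete' in port_config.stdout"
```

Inside a loop, the registered variable used in failed_when and changed_when refers to the current item's result, so each port is judged separately and the separate fail task is no longer needed.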

I didn't find any other Ansible modules that can help configure my device. However, if you are using Juniper or Cisco devices, you are in luck: Ansible has dedicated modules for them (for example, the junos_* and ios_* modules).
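For comparison, here is a sketch of what a vendor module looks like. It assumes a newer Ansible release (2.5 or later, where network modules use connection: network_cli), a hypothetical inventory group named cisco-switches with login credentials set as usual, and a hypothetical interface and description:

```yaml
---
# Sketch only: pushing configuration lines with the ios_config module.
# cisco-switches, GigabitEthernet0/1 and the description are hypothetical.
- hosts: cisco-switches
  connection: network_cli
  gather_facts: false
  vars:
    ansible_network_os: ios
  tasks:
    - name: Set an interface description
      ios_config:
        parents: interface GigabitEthernet0/1
        lines:
          - description uplink-to-core
```

Unlike raw, ios_config compares the requested lines with the running configuration and only sends what is missing, so a second run reports no change.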

Ansible supports many other situations. Here are a few things I learned.

What if you need to prompt the user before running the playbook tasks, and exit if the confirmation fails?

```yaml
- hosts: vnc-server
  vars_prompt:
    - name: "confirm"
      prompt: "Are you sure you want to un-install VNC, answer with 'yes'"
      default: "no"
      private: no
  tasks:
    - name: Check Confirmation
      fail: msg="confirmation failed"
      when: confirm != "yes"
```
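An alternative sketch uses the pause module, which prompts at task time and stores the answer in the registered variable's user_input field. This assumes a single target host, as in this lab:

```yaml
---
# Alternative sketch: confirmation with the pause module.
- hosts: vnc-server
  tasks:
    - name: Ask for confirmation
      pause:
        prompt: "Are you sure you want to un-install VNC, answer with 'yes'"
      register: confirmation    # the typed answer ends up in confirmation.user_input

    - name: Stop unless the answer was yes
      fail:
        msg: "confirmation failed"
      when: confirmation.user_input != "yes"
```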

What if you need to run a task based on the output of a previous task? In this example I check the status of the 'vnc' application and start it in the second task only if it is not running.

```yaml
- name: Check VNC status
  command: vnc status
  register: vnc_status

- name: start vnc if not already running
  shell: nohup vnc start
  # start only when the status output contains DOWN
  when: "'DOWN' in vnc_status.stdout"
```
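Because the status check only reads state, it can be marked with changed_when: false so it never shows up as a change in the PLAY RECAP. A sketch that wraps the two tasks in a play; like the example above, it assumes vnc status prints DOWN when the application is stopped:

```yaml
---
# Sketch: read-only status check that never counts as a change.
# Assumes 'vnc status' prints DOWN when the application is stopped.
- hosts: vnc-server
  tasks:
    - name: Check VNC status
      command: vnc status
      register: vnc_status
      changed_when: false

    - name: start vnc if not already running
      shell: nohup vnc start
      when: "'DOWN' in vnc_status.stdout"
```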

If your application doesn't provide a way to check its status, you can use the Linux 'ps' command instead. A simple shell command like this checks the application status:

```yaml
- name: check vnc status
  shell: if ps -ef | egrep 'karaf' | grep -v grep > /dev/null; then echo "vnc_running"; else echo "vnc_not_running"; fi
  register: vnc_status

- name: start vnc if not already running
  shell: nohup vnc start
  when: vnc_status.stdout.find('vnc_not_running') == 0
```
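Another option is pgrep, which exits with 0 when a matching process exists and 1 when none does, so the second task can test the return code instead of parsing text. A sketch, assuming pgrep is available on the remote server and the process still matches 'karaf':

```yaml
---
# Sketch: branch on pgrep's return code instead of parsing echo output.
- hosts: vnc-server
  tasks:
    - name: check vnc status
      command: pgrep -f karaf
      register: vnc_status
      failed_when: false    # a non-zero rc just means "not running"
      changed_when: false   # read-only check

    - name: start vnc if not already running
      shell: nohup vnc start
      when: vnc_status.rc != 0
```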

Ansible gathers facts from the remote server, and you can use these facts in your playbook. In this example I use 'ansible_pkg_mgr', which will be yum or apt depending on your Linux distribution.

```yaml
- name: Install git package
  yum:
    state: present
    name: git
  when: ansible_pkg_mgr == "yum"
  become: true
  become_method: sudo
```
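The same fact can drive a matching task for Debian or Ubuntu hosts, where ansible_pkg_mgr is apt. A sketch of that counterpart task:

```yaml
# Sketch: counterpart task for apt-based hosts.
- name: Install git package
  apt:
    name: git
    state: present
    update_cache: yes   # refresh the package index before installing
  when: ansible_pkg_mgr == "apt"
  become: true
  become_method: sudo
```

Alternatively, the generic package module (Ansible 2.0 and later) picks the package manager for you based on the same fact.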

You can view the facts gathered by Ansible by running this command:

$ansible -i hosts -m setup vnc-server
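Inside a playbook, gathered facts are ordinary variables, so a debug task can print just the ones you need. A minimal sketch:

```yaml
---
# Sketch: print selected gathered facts from a playbook.
- hosts: vnc-server
  tasks:
    - name: Show the package manager fact
      debug:
        var: ansible_pkg_mgr

    - name: Show distribution facts
      debug:
        msg: "{{ ansible_distribution }} {{ ansible_distribution_version }}"
```

For ad hoc use, the setup module also accepts a filter argument, for example $ansible -i hosts -m setup -a "filter=ansible_pkg_mgr" vnc-server.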

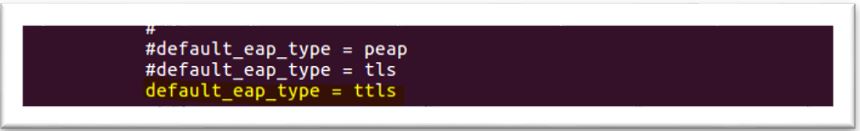

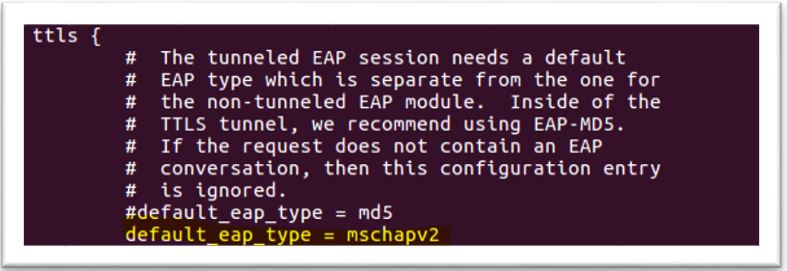

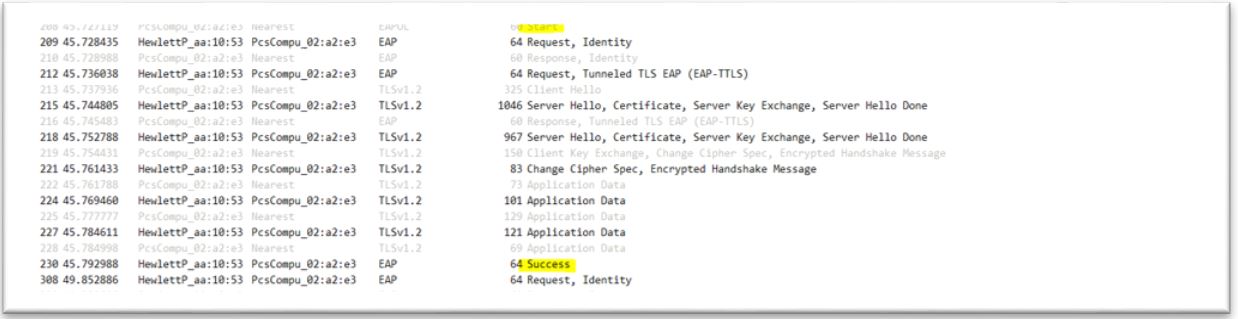

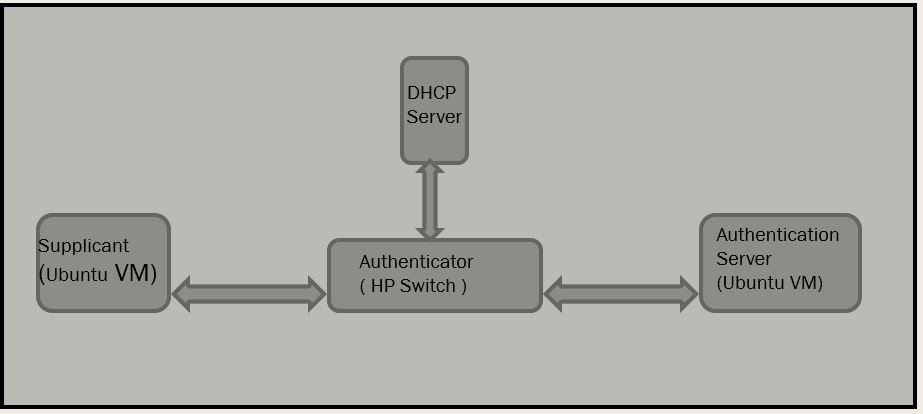

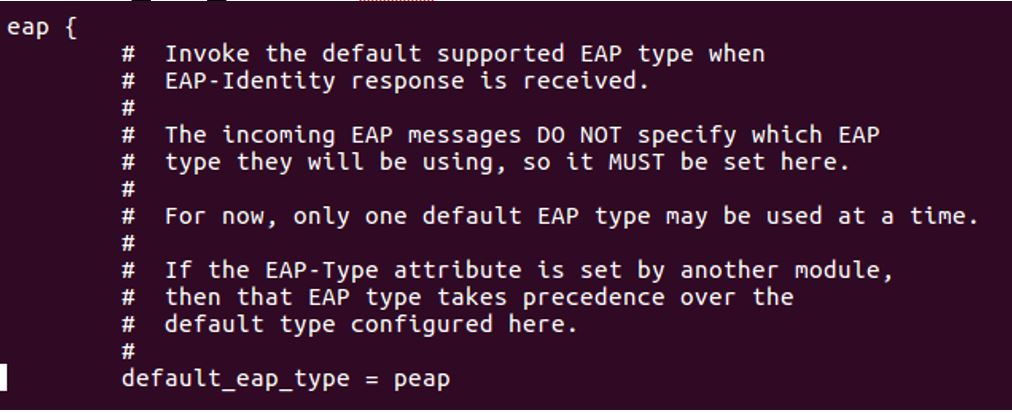

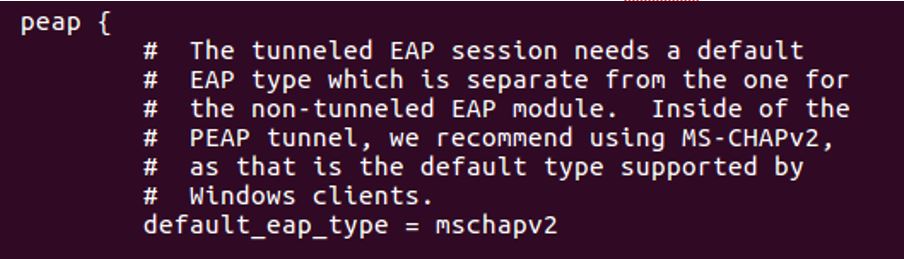

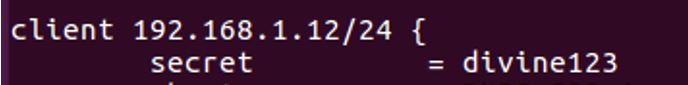

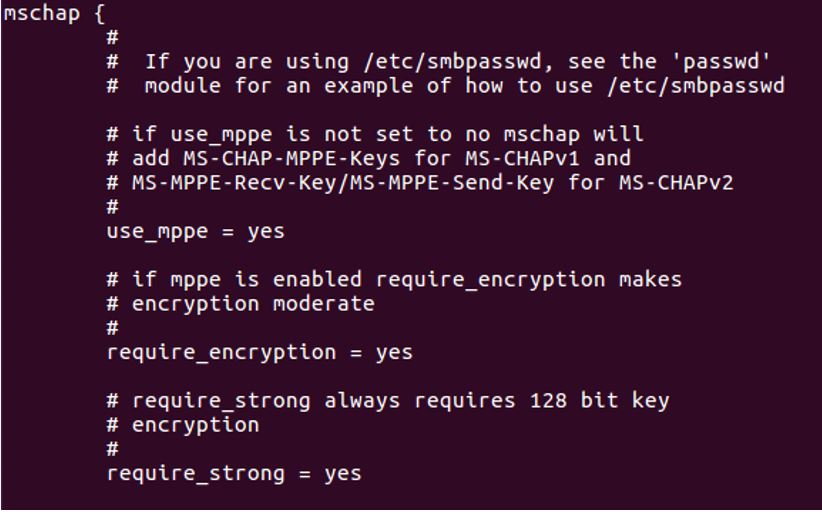

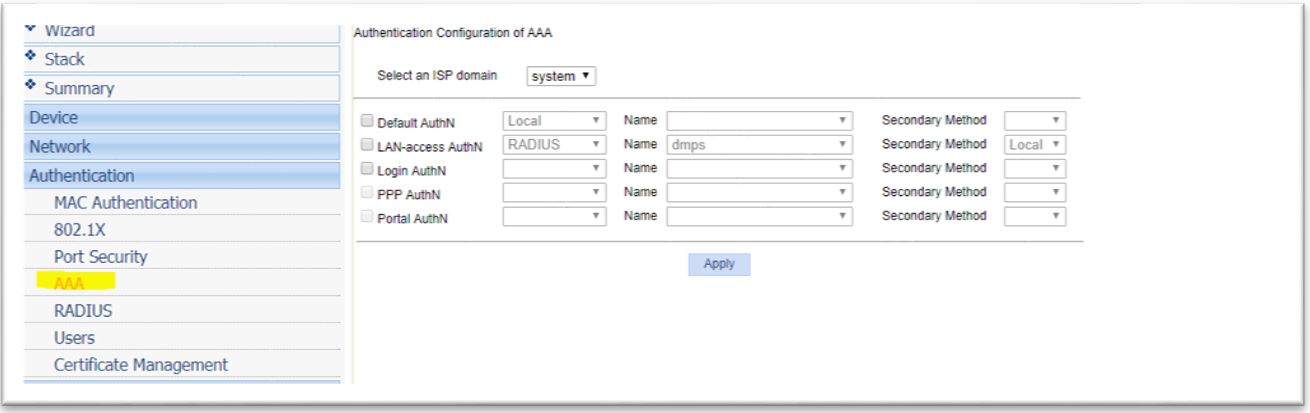

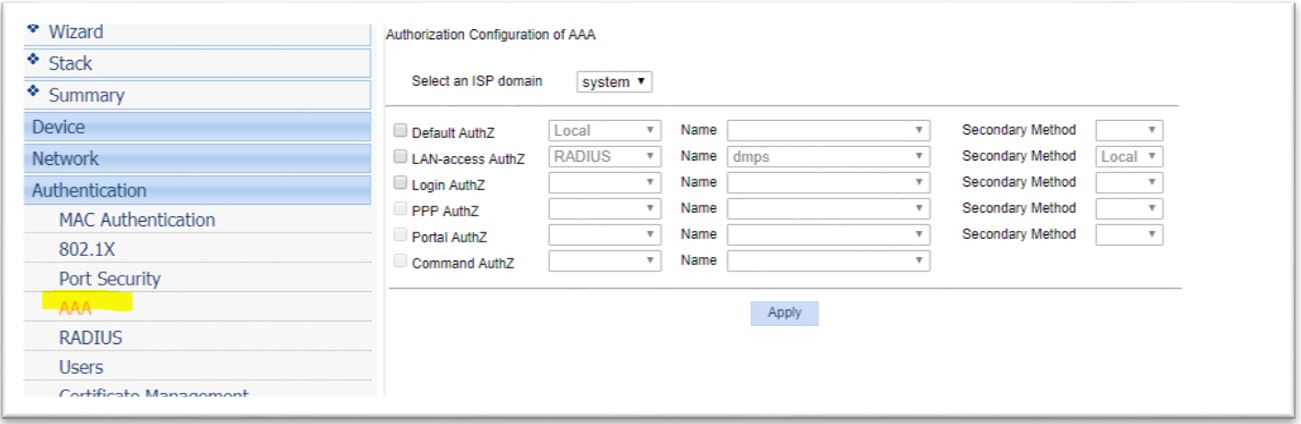

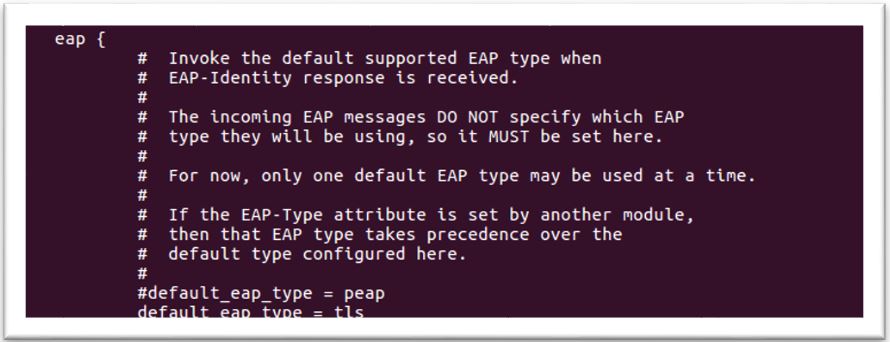

4. Configure mschap under /etc/freeradius/modules/mschap

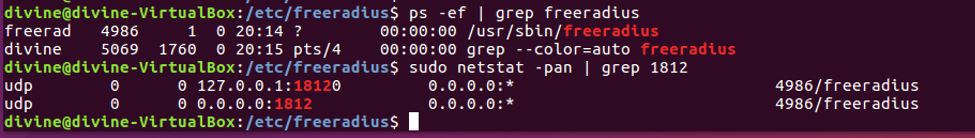

5. Start the freeradius server with $sudo service freeradius restart. Make sure the server is running and listening on port 1812.

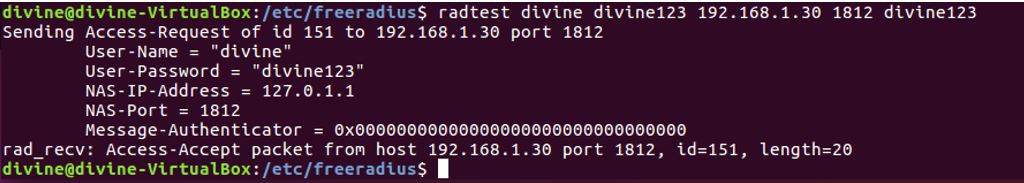

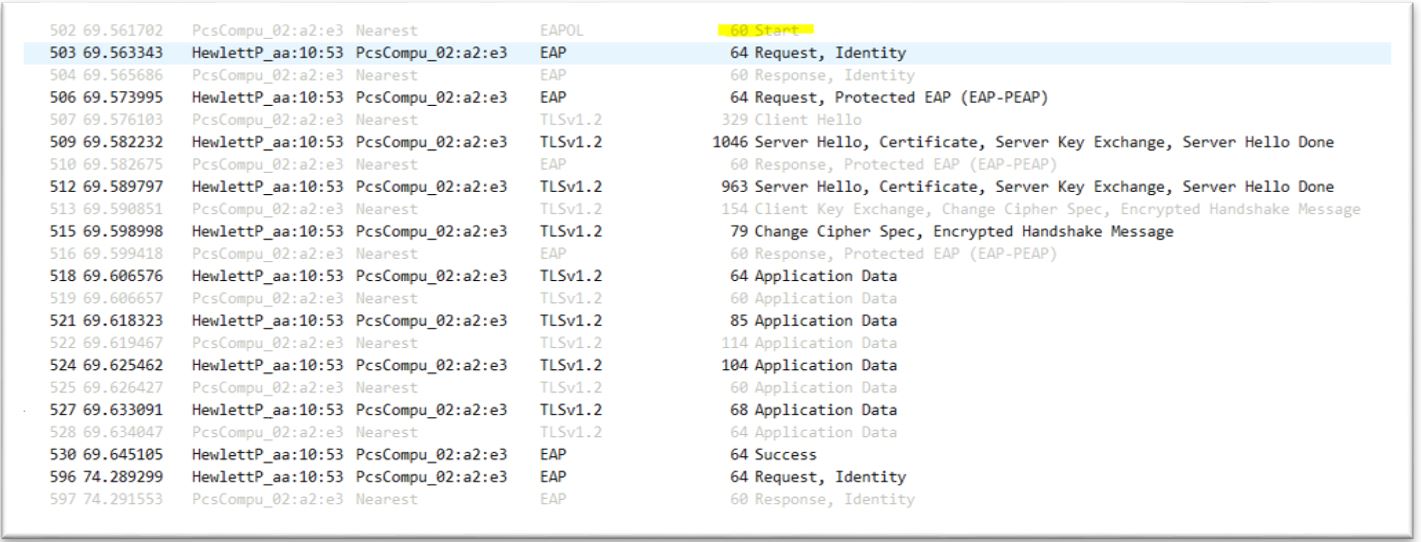


6. Now that the server is running, let's test it to make sure it can handle incoming requests. If you receive Access-Accept, the configuration is good. Try this command:

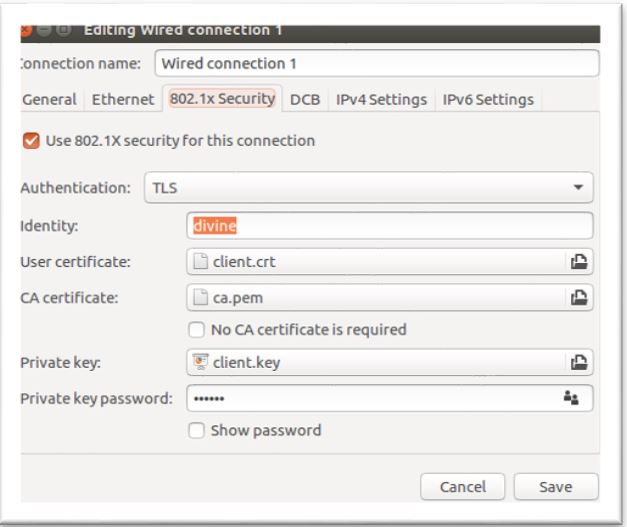

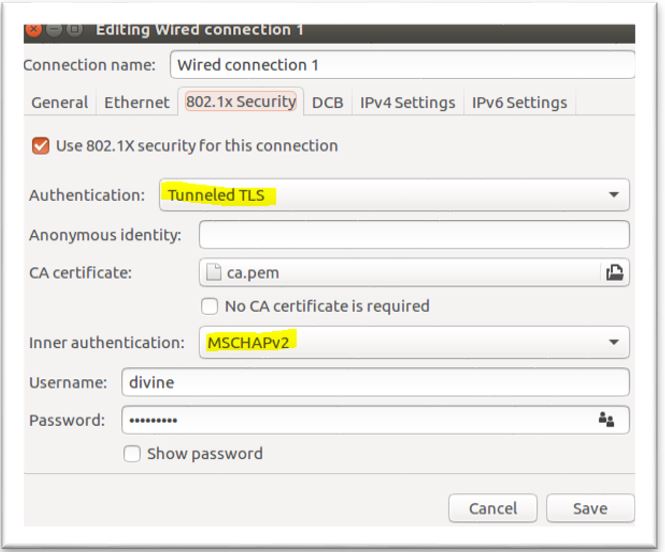

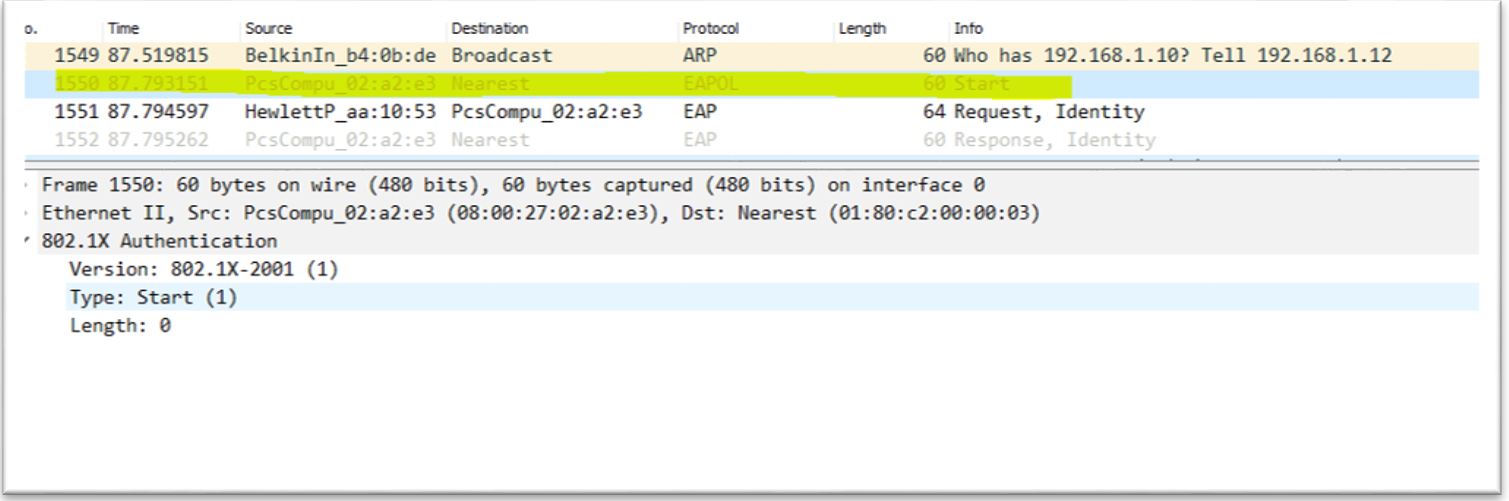

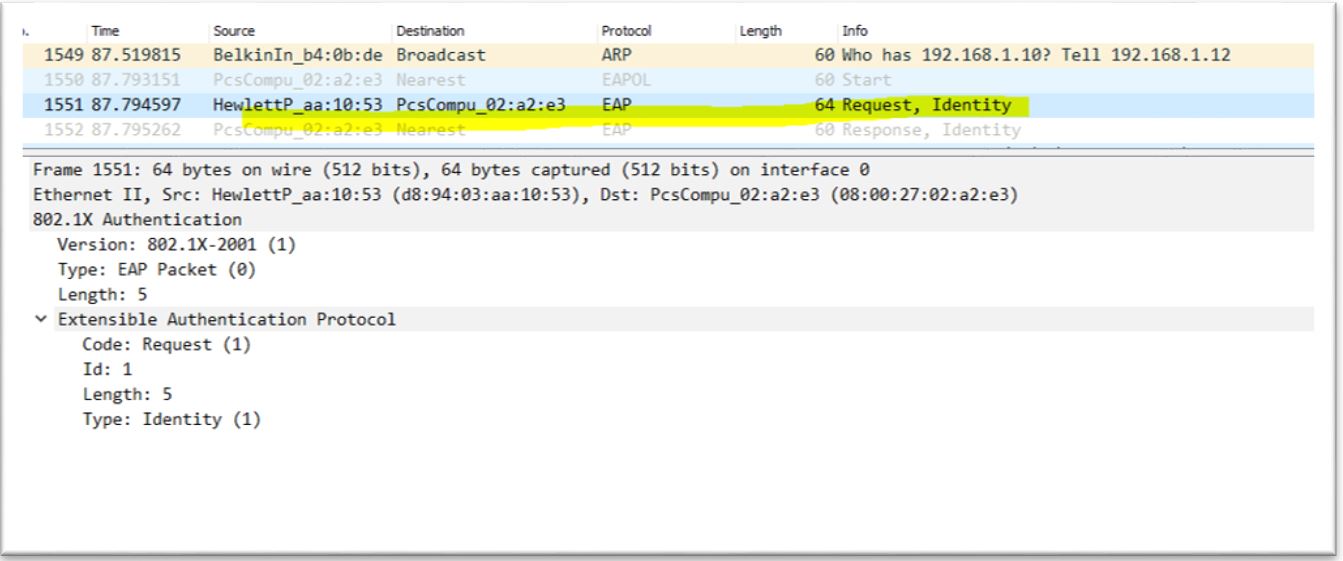

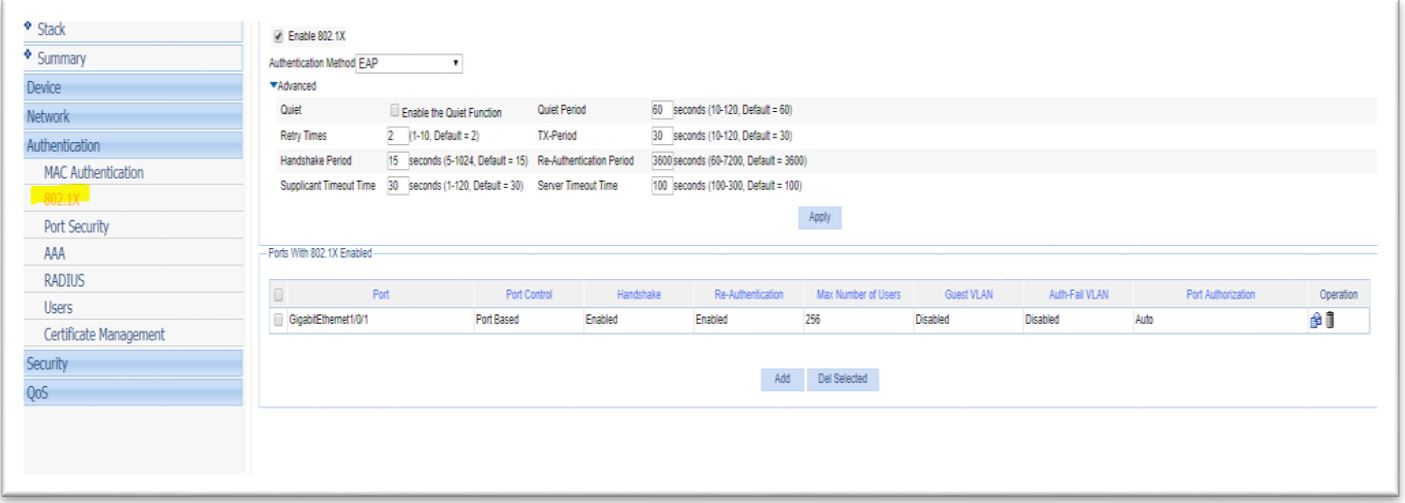

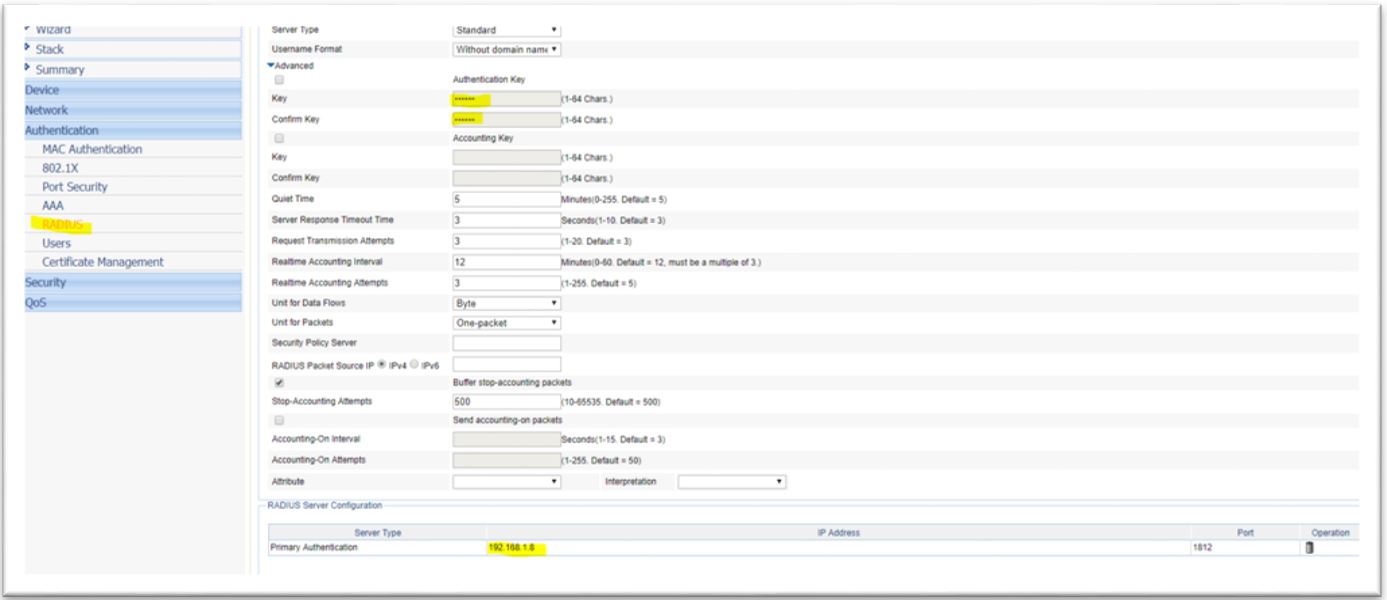

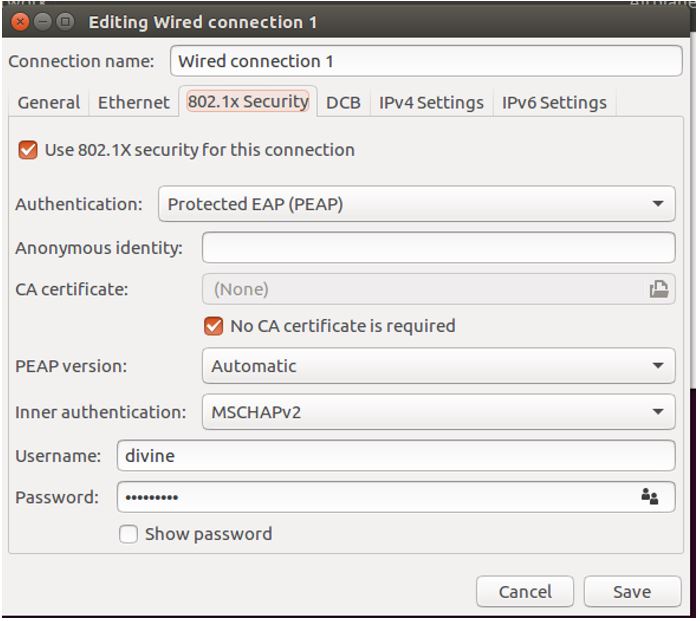

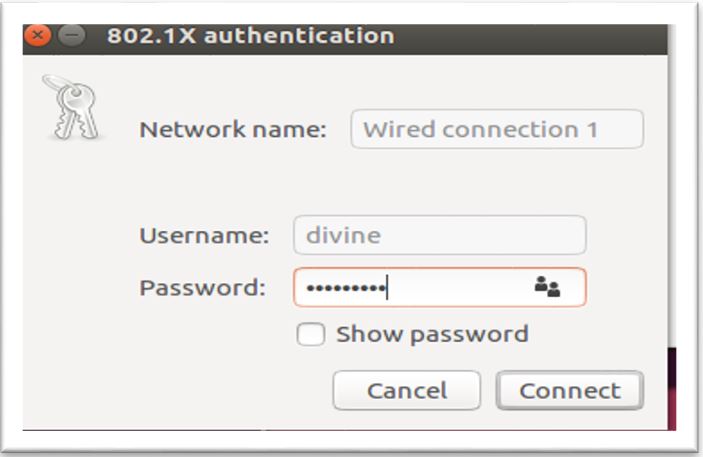

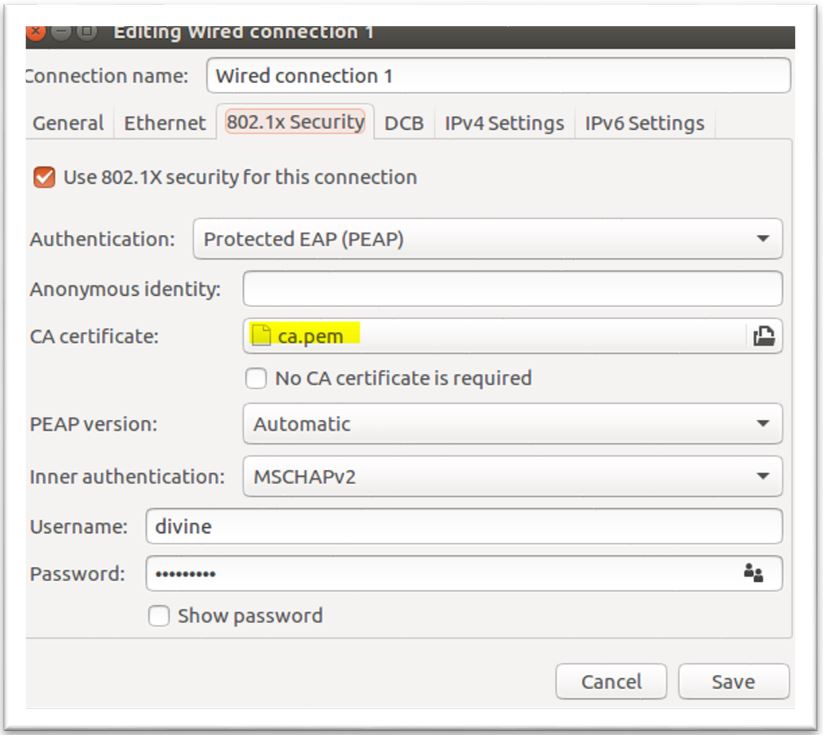

3. Configure Supplicant
