Puppet, like Ansible, Chef and Salt, is a configuration management tool. Say you are a system admin and your job is to manage company servers: you install new packages, add and delete users, and perform various other tasks. Puppet lets you automate all of these tasks and makes life easier.

You can run Puppet in standalone mode or in client-server mode. In client-server mode you install the Puppet agent on each machine that needs to be managed and the Puppet server on another machine. A single server can manage multiple agents.

Puppet uses a pull model: agents pull their configuration data from the server. This can happen on demand, by running ‘puppet agent --test’ on the client machine, or periodically. By default the client asks the server for configuration data every 30 minutes. As far as I know there is no push operation from the Puppet server to the client; it is always a pull from the client.
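The 30-minute cadence corresponds to the agent's runinterval setting. As a minimal sketch, assuming the puppet command is on the PATH, the interval can be inspected from a shell. The helper name show_runinterval is mine and only wraps the real `puppet config print` command.

```shell
# show_runinterval: print the agent's check-in interval in seconds.
# Hypothetical helper name; it only wraps `puppet config print`.
show_runinterval() {
  if command -v puppet >/dev/null 2>&1; then
    puppet config print runinterval --section agent
  else
    echo "puppet not found on PATH"
  fi
}

show_runinterval
```

On a default install I would expect this to report 1800 (seconds). The value can be changed in the agent section of puppet.conf, and the agent service has to be restarted to pick it up.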

Puppet programs work on desired state: Puppet changes a resource from its current state to the desired state, and if the resource is already in the desired state no action is performed. You specify the desired state in a Puppet program (called a manifest). Say you want to install a new package on a client machine: you write a manifest and specify the desired state ‘ensure => present’. When the Puppet client pulls this configuration data (called a catalog) from the server, it checks the desired state for this particular package. If the package is already in the desired state ‘present’ (already installed), no action is performed. If the package is in a different state, the client brings it to the ‘present’ state (installs the package). If you need to remove a package, change the desired state to ‘absent’ and the client will remove it.
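As a minimal sketch of the package example described above, the shell snippet below writes a one-resource manifest and, if Puppet happens to be installed locally, checks its syntax and previews the change without applying it. The package name tree and the temporary file path are placeholders of mine, not part of this lab.

```shell
# Write a one-resource manifest into a temporary directory.
# 'tree' is a placeholder package name chosen for illustration.
manifest="$(mktemp -d)/package.pp"
cat > "$manifest" <<'EOF'
package { 'tree':
  ensure => present,  # desired state; use absent to have the package removed
}
EOF

# Syntax-check and dry-run only when the puppet command is available.
if command -v puppet >/dev/null 2>&1; then
  puppet parser validate "$manifest"
  puppet apply --noop "$manifest"
fi
```

Running the dry run a second time after a real apply should report nothing to do, which is the point of describing state rather than steps.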

In this lab I will demonstrate how to install Puppet in a client-server configuration and how to create a simple Puppet manifest.

It's a good idea to know the commonly used Puppet terminology before we start.

Puppet master:

The machine running the Puppet server is called the Puppet master. The master has a certificate authority (CA) that generates and signs certificates. I use master and server interchangeably.

Puppet agent:

A machine running the Puppet agent is called a Puppet agent, or simply an agent. I use agent and client interchangeably.

Puppet manifest:

Puppet programs are called manifests and have the .pp extension. Related manifests, together with the files and templates they use, are grouped into modules.

Catalog:

A document the agent pulls from the master that contains the desired state of each resource. Every time you run ‘puppet agent --test’ the agent pulls the catalog from the server.

Resource:

A resource is something that you manage on a client machine. For example, file and package are resources. Using the file resource you can create, delete or update a file on the client.

Pre-condition:

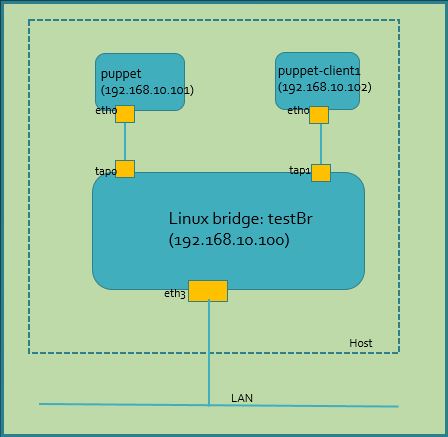

A Linux machine. I am using Ubuntu 14.04 LTS. I have created this topology with a Linux bridge: three Ubuntu 14.04 VMs simulating server and agent. They are all in the same subnet, and I can ping from one VM to another through the bridge. Check out my lab-15 for the Linux bridge setup. Note: If you have two machines you can set up one as the server and the other as the agent; there is no need to follow lab-15.

sjakhwal@rtxl3rld05:/etc/network$ sudo brctl show

[sudo] password for sjakhwal:

bridge name     bridge id               STP enabled     interfaces

testBr          8000.000acd27b824       no              eth3

                                                        tap0

                                                        tap1

virbr0          8000.000000000000       yes

I have created two Ubuntu 14.04 VMs with hostname puppet & puppet-client1.

On the VM with hostname puppet, install puppetserver.

wget https://apt.puppetlabs.com/puppetlabs-release-pc1-trusty.deb

sudo dpkg -i puppetlabs-release-pc1-trusty.deb

#refresh the package index so apt can see the newly added repository

sudo apt-get update

sudo apt-get install puppetserver

labadmin@puppet:$ puppetserver --version

puppetserver version: 2.3.1

On the VM with hostname puppet-client1, install the Puppet agent.

wget https://apt.puppetlabs.com/puppetlabs-release-pc1-trusty.deb

sudo dpkg -i puppetlabs-release-pc1-trusty.deb

#refresh the package index, then install the agent package

sudo apt-get update

sudo apt-get install puppet-agent

labadmin@puppet-client1:~$ puppet --version

4.4.2

This completes the installation part. Now let's set up server-to-agent communication.

By default, Puppet agents use the hostname ‘puppet’ to communicate with the server. I have already set the server hostname to ‘puppet’; now we need to make sure it resolves from the agents. This can be done by updating the /etc/hosts file.

I have added this line in puppet-client1 /etc/hosts file. 192.168.10.101 is server IP address

192.168.10.101 puppet.sunny.net puppet

I have added this line to the server's /etc/hosts file to resolve the client's hostname

192.168.10.102 puppet-client1.sunny.net puppet-client1

Set the search domain in the /etc/resolv.conf file on all machines (agents and server). Note: You will lose this setting when the machine reboots. A more permanent way is to add the domain in the interfaces file.

search sunny.net

Try to ping by hostname from both the agent and the server machines and make sure the ping succeeds.

ping puppet

ping puppet-client1.sunny.net

Start the Puppet server. Check that it is running and listening on port 8140, which is the port the agent uses to connect to the master. Note: If your agent is unable to connect to the master, make sure port 8140 is not blocked by a firewall.

Note: Make sure your user is part of the ‘puppet’ group.

#start puppet server

labadmin@puppet:/etc/puppet$ sudo service puppetserver start

labadmin@puppet:/etc/puppet$ ps -ef | grep puppet

avahi 10079 1 0 11:28 ? 00:00:00 avahi-daemon: running [puppet.local]

puppet 10577 1 0 11:46 ? 00:02:11 /usr/bin/java -XX:OnOutOfMemoryError=kill -9 %p -Djava.security.egd=/dev/urandom -Xms2g -Xmx2g -XX:MaxPermSize=256m -cp /opt/puppetlabs/server/apps/puppetserver/puppet-server-release.jar clojure.main -m puppetlabs.trapperkeeper.main --config /etc/puppetlabs/puppetserver/conf.d -b /etc/puppetlabs/puppetserver/bootstrap.cfg

labadmin@puppet:$ puppetserver --version

puppetserver version: 2.3.1

labadmin@puppet:$ sudo netstat -pan | grep 8140

[sudo] password for labadmin:

tcp6 0 0 :::8140 :::* LISTEN 10577/java

labadmin@puppet:$

#Use this command to open port 8140 on master if you think port is blocked (iptables needs root)

$ sudo iptables -I INPUT -m state --state NEW -m tcp -p tcp --dport 8140 -j ACCEPT
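To check from the agent side whether the master's port is reachable, a small sketch using bash's /dev/tcp redirection can help. The function name port_open and the 3-second timeout are my own choices; the sketch assumes bash and the coreutils timeout command are available.

```shell
# port_open HOST PORT: exit status 0 if a TCP connection can be opened.
# Hypothetical helper built on bash's /dev/tcp pseudo-device.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Run on the agent; 'puppet' is the server hostname used in this lab.
if port_open puppet 8140; then
  echo "port 8140 reachable"
else
  echo "port 8140 not reachable"
fi
```

A "not reachable" result does not say why: it could be name resolution, the server not running, or a firewall, so it is only a first check before looking at iptables.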

Start puppet agent

labadmin@puppet-client1:~$ which puppet

/opt/puppetlabs/bin/puppet

labadmin@puppet-client1:~$ puppet --version

4.4.2

labadmin@puppet-client1:~$ sudo ps -ef | grep puppet

[sudo] password for labadmin:

avahi 3483 1 0 Apr27 ? 00:00:00 avahi-daemon: running [puppet-client1.local]

root 4261 1 0 12:03 ? 00:00:02 /opt/puppetlabs/puppet/bin/ruby /opt/puppetlabs/puppet/bin/puppet agent

labadmin 6185 1 0 18:37 ? 00:00:03 /opt/puppetlabs/puppet/bin/ruby /opt/puppetlabs/bin/puppet agent --server puppet.sunny.net

labadmin 6838 4453 0 21:44 pts/0 00:00:00 grep --color=auto puppet

labadmin@puppet-client1:~$

#start puppet agent

labadmin@puppet-client1:$ sudo /opt/puppetlabs/bin/puppet resource service puppet ensure=running enable=true

#try this command to generate certificates

$ puppet agent --test

Sign the agent's certificate on the server.

#list certificate. make sure you run it as 'sudo' otherwise you will not see anything.

#As you see client1 certificate is waiting to be signed by server

labadmin@puppet:$ sudo /opt/puppetlabs/bin/puppet cert list

"puppet-client1.sunny.net" (SHA256) F1:54:9D:D0:15:E6:4C:A9:97:20:A0:A5:82:A1:82:EA:3F:0F:F8:56:36:72:EE:7E:6C:BC:5B:D3:BC:89:F3:AE

#sign certificate for client1

labadmin@puppet:$ sudo /opt/puppetlabs/bin/puppet cert sign puppet-client1.sunny.net

Notice: Signed certificate request for puppet-client1.sunny.net

Notice: Removing file Puppet::SSL::CertificateRequest puppet-client1.sunny.net at '/etc/puppetlabs/puppet/ssl/ca/requests/puppet-client1.sunny.net.pem'

#list all certificates (signed and unsigned), '+' mean signed. server certificate is by default signed

labadmin@puppet:$ sudo /opt/puppetlabs/bin/puppet cert list --all

+ "puppet-client1.sunny.net" (SHA256) 1D:D7:74:54:93:DE:A9:9E:95:B2:6A:83:F4:66:11:DE:BA:BA:98:70:02:50:5F:1F:66:FB:53:83:3E:67:30:9C

+ "puppet.sunny.net" (SHA256) 50:1A:0C:74:45:98:9A:70:8E:33:AE:70:D8:FF:6F:06:E4:F5:22:8F:97:8F:8D:4A:40:66:DF:6B:13:4F:3A:CB (alt names: "DNS:puppet", "DNS:puppet.sunny.net")

labadmin@puppet:$

This completes the handshake between server and agent. We are now ready to write our first Puppet manifest. We will start with the default manifest ‘site.pp’ in the directory /etc/puppetlabs/code/environments/production/manifests.

Create the file site.pp and add these contents to it

labadmin@puppet:/etc/puppetlabs/code/environments/production/manifests$ cat site.pp

node default {}

node "puppet-client1" {

file {"/home/labadmin/hello-puppet.txt":

ensure => "file",

owner => "labadmin",

content => "Congratulations! you have created a test file using puppet\n",

}

}

This is a simple manifest with a file resource for client1 (hostname puppet-client1). The file resource describes the file ‘hello-puppet.txt’ under the directory /home/labadmin: we want it to be a regular file, owned by ‘labadmin’, with the contents ‘Congratulations! you have created a test file using puppet’. If this file is not present on the agent, Puppet will create it. If the file is present but its contents don't match, Puppet will update the contents.
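The create-or-update behaviour described here can be sketched in plain shell. This is only a toy model of the idea, not how Puppet implements the file resource. The function name ensure_file and its messages are made up for illustration, and CONTENT is assumed not to end in a newline.

```shell
# ensure_file PATH CONTENT: bring PATH to the desired content and
# report what had to be done. Toy model only, not Puppet's code.
ensure_file() {
  path="$1"
  want="$2"
  if [ ! -f "$path" ]; then
    printf '%s\n' "$want" > "$path"       # missing: create it
    echo "created"
  elif [ "$(cat "$path")" != "$want" ]; then
    printf '%s\n' "$want" > "$path"       # present but different: fix it
    echo "content changed"
  else
    echo "in sync"                        # already in desired state: no action
  fi
}

# Usage against a scratch file (the path is a throwaway temp location).
f="$(mktemp -d)/hello-puppet.txt"
ensure_file "$f" "hello"   # prints: created
ensure_file "$f" "hello"   # prints: in sync
ensure_file "$f" "bye"     # prints: content changed
```

The second call is the interesting one: because the function compares current state with desired state before acting, repeating it changes nothing.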

Validate the manifest for syntax errors. Run this command on the server

puppet parser validate site.pp

First confirm that the resource on client1 is not already in the state described in the manifest.

#no file in /home/labadmin

labadmin@puppet-client1:~$ pwd

/home/labadmin

labadmin@puppet-client1:~$ ls

examples.desktop puppetlabs-release-pc1-trusty.deb

Now let's pull the client1 configuration from the server and check the resource state. First do a dry run.

#do a dry run

labadmin@puppet-client1:~$ puppet agent --server=puppet --onetime --no-daemonize --verbose --noop

Info: Using configured environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Applying configuration version '1462070893'

Notice: /Stage[main]/Main/Node[puppet-client1]/File[/home/labadmin/hello-puppet.txt]/ensure: current_value absent, should be file (noop)

Notice: Node[puppet-client1]: Would have triggered 'refresh' from 1 events

Notice: Class[Main]: Would have triggered 'refresh' from 1 events

Notice: Stage[main]: Would have triggered 'refresh' from 1 events

Notice: Applied catalog in 0.10 seconds

labadmin@puppet-client1:~$

#run puppet to pull configuration from server

labadmin@puppet-client1:~$ puppet agent --test

#check that the file has been created with the right contents

labadmin@puppet-client1:~$ cat hello-puppet.txt

Congratulations! you have created a test file using puppet

labadmin@puppet-client1:~$

Update manifest to change file mode.

#add mode field in the manifest file 'site.pp'

node default {}

node "puppet-client1" {

file {"/home/labadmin/hello-puppet.txt":

ensure => "file",

owner => "labadmin",

mode => "666",

content => "Congratulations! you have created a test file using puppet\n",

}

}

#do dry run on client1

labadmin@puppet-client1:~$ puppet agent --server=puppet --onetime --no-daemonize --verbose --noop

Info: Using configured environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Applying configuration version '1462108972'

Notice: /Stage[main]/Main/Node[puppet-client1]/File[/home/labadmin/hello-puppet.txt]/mode: current_value 0644, should be 0666 (noop)

Notice: Node[puppet-client1]: Would have triggered 'refresh' from 1 events

Notice: Class[Main]: Would have triggered 'refresh' from 1 events

Notice: Stage[main]: Would have triggered 'refresh' from 1 events

Notice: Applied catalog in 0.04 seconds

#as you can see file mode is '644'

labadmin@puppet-client1:~$ ls -la hello-puppet.txt

-rw-r--r-- 1 labadmin labadmin 59 Apr 30 21:53 hello-puppet.txt

#pull configuration from server

labadmin@puppet-client1:~$ puppet agent --test

Info: Using configured environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for puppet-client1.sunny.net

Info: Applying configuration version '1462109031'

Notice: /Stage[main]/Main/Node[puppet-client1]/File[/home/labadmin/hello-puppet.txt]/mode: mode changed '0644' to '0666'

Notice: Applied catalog in 0.03 seconds

#as you can see file mode changed to '666'

labadmin@puppet-client1:~$ ls -la hello-puppet.txt

-rw-rw-rw- 1 labadmin labadmin 59 Apr 30 21:53 hello-puppet.txt

labadmin@puppet-client1:~$

Delete file

#update desired state to 'absent'

node default {}

node "puppet-client1" {

file {"/home/labadmin/hello-puppet.txt":

ensure => "absent",

owner => "labadmin",

mode => "666",

content => "Congratulations! you have created a test file using puppet\n",

}

}

#file is still present on client1 before the run

labadmin@puppet-client1:~$ ls -la hello-puppet.txt

-rw-rw-rw- 1 labadmin labadmin 59 Apr 30 21:53 hello-puppet.txt

#do a dry run

labadmin@puppet-client1:~$ puppet agent --server=puppet --onetime --no-daemonize --verbose --noop

Info: Using configured environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Applying configuration version '1462196853'

Notice: /Stage[main]/Main/Node[puppet-client1]/File[/home/labadmin/hello-puppet.txt]/ensure: current_value file, should be absent (noop)

Notice: Node[puppet-client1]: Would have triggered 'refresh' from 1 events

Notice: Class[Main]: Would have triggered 'refresh' from 1 events

Notice: Stage[main]: Would have triggered 'refresh' from 1 events

Notice: Applied catalog in 0.04 seconds

#pull configuration from server

labadmin@puppet-client1:~$ puppet agent --test

Info: Using configured environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for puppet-client1.sunny.net

Info: Applying configuration version '1462196909'

Info: Computing checksum on file /home/labadmin/hello-puppet.txt

Info: /Stage[main]/Main/Node[puppet-client1]/File[/home/labadmin/hello-puppet.txt]: Filebucketed /home/labadmin/hello-puppet.txt to puppet with sum 3b70fe8cd273dfaec69f4356bee53529

Notice: /Stage[main]/Main/Node[puppet-client1]/File[/home/labadmin/hello-puppet.txt]/ensure: removed

Notice: Applied catalog in 0.07 seconds

#as you can see file has been deleted from client1

labadmin@puppet-client1:~$ ls -l

total 24

drwxr-xr-x 2 labadmin labadmin 4096 Apr 29 17:15 Desktop

-rw-r--r-- 1 labadmin labadmin 8980 Apr 27 17:58 examples.desktop

-rw-r--r-- 1 root root 5244 Apr 19 17:53 puppetlabs-release-pc1-trusty.deb

labadmin@puppet-client1:~$
