In Lab-35 I went over Docker Swarm, a container orchestration framework from Docker. In this lab I will go over another orchestration framework, Kubernetes. Kubernetes is an open source platform originally developed by Google. It provides orchestration for Docker and other types of containers.

The purpose of this lab is to get familiar with Kubernetes, install it on Linux and deploy a simple Kubernetes pod. This lab is a multi-node deployment of a Kubernetes cluster.

Let’s get familiar with Kubernetes terminology.

Master:

A Master is a VM or a physical computer responsible for managing the cluster. The master coordinates all activities in your cluster, such as scheduling applications, maintaining applications’ desired state, scaling applications, and rolling out new updates.

By default, pods are not scheduled on the Master. If you would like to schedule pods on the Master, run this command on the Master:

# kubectl taint nodes --all dedicated-

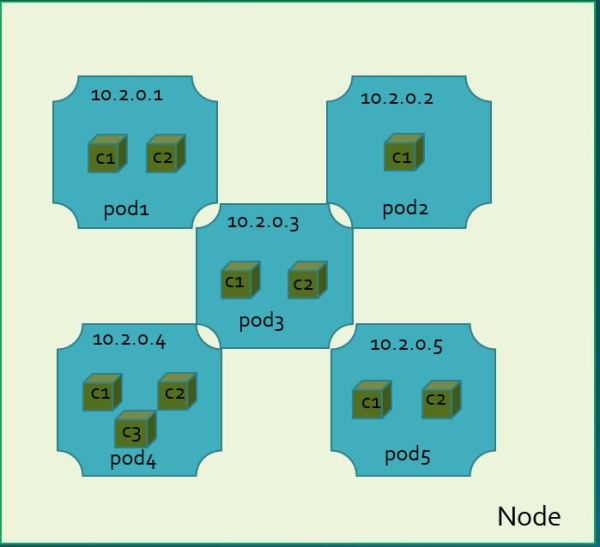

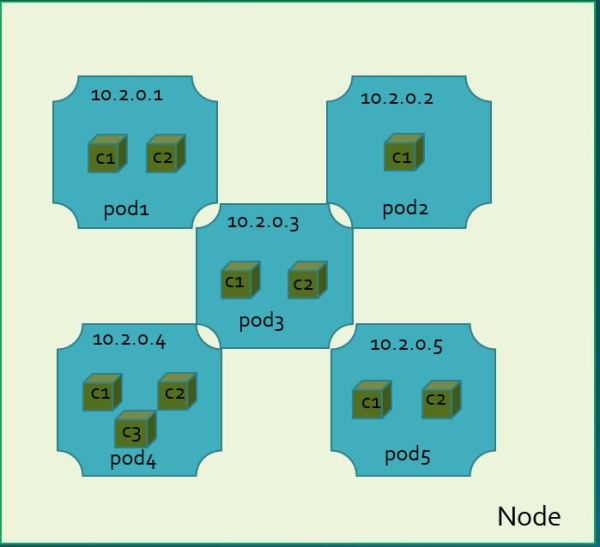

Node:

A node is a VM or a physical computer that serves as a worker machine in a Kubernetes cluster. Each node has a Kubelet, which is an agent for managing the node and communicating with the Kubernetes master. The node should also have tools for handling container operations, such as Docker.

Pod:

A pod is a group of one or more containers. All the containers in a pod are scheduled together, live together and die together. Kubernetes deploys pods rather than individual containers because some applications are tightly coupled and make sense to deploy together, e.g. a web server and a cache server. You can have separate containers for the web server and the cache server but deploy them in one pod; that way they are scheduled together on the same node and terminated together. It is easier to manage a pod than individual containers. Read more about pods here

A pod is similar to a VM in terms of process virtualization: both run multiple processes (containers, in the case of a pod), all processes share the same IP address, all processes can communicate using localhost, and they use a network namespace separate from the host's.
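To make the web server plus cache server idea concrete, here is a minimal sketch that writes a two-container pod manifest from the shell. The pod name `web-cache`, the container names, the `redis` image and the output path are my own illustrative assumptions and are not used anywhere else in this lab.

```shell
#!/bin/sh
# Sketch only: writes a two-container pod manifest to a scratch file.
# Pod name, container names and the redis image are hypothetical choices.
manifest="${TMPDIR:-/tmp}/two_container_pod.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-cache
  labels:
    app: web-cache
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
  - name: cache
    image: redis
    ports:
    - containerPort: 6379
EOF

# Count the containers declared in the manifest (lines of the form "  - name: ...").
container_count=$(grep -c '^  - name:' "$manifest")
echo "wrote $manifest with $container_count containers"
```

If such a manifest were created on the Master with `kubectl create -f`, both containers would land on the same node and share one IP address, so the web container could reach the cache at localhost:6379.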

Some key points about pod:

- Containers in a pod are always co-located and co-scheduled, and run in a shared context

- A pod contains one or more application containers which are relatively tightly coupled; in a pre-container world, they would have executed on the same physical or virtual machine

- The shared context of a pod is a set of Linux namespaces, cgroups, and potentially other facets of isolation – the same things that isolate a Docker container

- Containers within a pod share an IP address, port space and hostname. Containers within a pod communicate using localhost

- Every pod gets an IP address

Below is an example of pod deployment in a node.

Replication controller

The replication controller in Kubernetes is responsible for replicating pods. A ReplicationController ensures that a specified number of pod “replicas” are running at any one time. It checks pod health, and if a pod dies it re-creates it automatically.

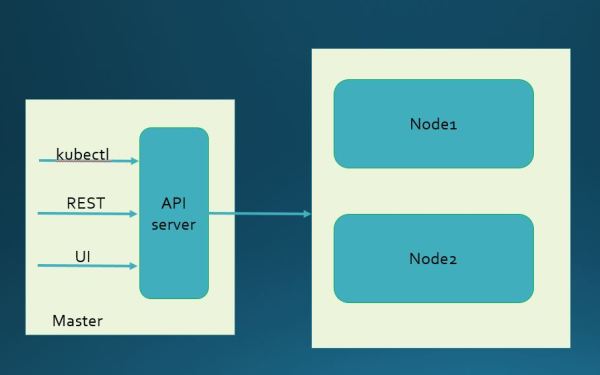

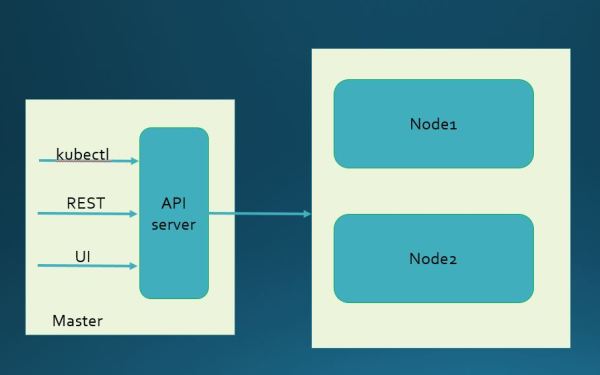
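The replication controller behaviour described above can be pictured as a simple reconcile step: compare the desired replica count with the pods that are actually running and act on the difference. The function below is only a toy model of that idea in shell, not Kubernetes code; the name `reconcile` and the pod names are made up for illustration.

```shell
#!/bin/sh
# Toy model of a replication controller's reconcile step (not Kubernetes code).
# Usage: reconcile DESIRED RUNNING_POD_NAME...
# Prints one line describing the action a controller would take.
reconcile() {
    desired=$1
    shift
    current=$#            # number of pods currently running

    if [ "$current" -lt "$desired" ]; then
        echo "create $((desired - current))"
    elif [ "$current" -gt "$desired" ]; then
        echo "delete $((current - desired))"
    else
        echo "noop"
    fi
}

reconcile 3 pod-a pod-b          # one pod died: prints "create 1"
reconcile 3 pod-a pod-b pod-c    # steady state: prints "noop"
reconcile 2 pod-a pod-b pod-c    # scaled down: prints "delete 1"
```

The real controller runs this comparison continuously, which is why a deleted pod is replaced within seconds and why changing the replica count is enough to scale up or down.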

API server

Kubernetes deploys the API server on the Master. The API server provides the front end to the cluster and serves REST services. You can interact with the cluster using 1) the CLI (kubectl), 2) the REST API, or 3) the GUI. kubectl and the GUI use the REST API internally.
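As a rough illustration of the REST option, the sketch below builds the URL that lists pods in a namespace. It assumes the API is reachable through `kubectl proxy` on 127.0.0.1:8001, which is how the proxy is started later in this lab; the helper name `pods_url` is hypothetical.

```shell
#!/bin/sh
# Sketch: build the REST URL that lists pods in a namespace.
# Assumes "kubectl proxy" is serving the API on 127.0.0.1:8001.
API_BASE="http://127.0.0.1:8001"

pods_url() {
    namespace=${1:-default}
    echo "$API_BASE/api/v1/namespaces/$namespace/pods"
}

pods_url              # prints http://127.0.0.1:8001/api/v1/namespaces/default/pods
pods_url kube-system  # prints http://127.0.0.1:8001/api/v1/namespaces/kube-system/pods

# With the proxy running, the list could be fetched with, for example:
#   curl -s "$(pods_url kube-system)"
```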

Prerequisite:

In this lab I am using my 3-node Vagrant infrastructure. Check Lab-41 for details on how to set up VMs in Vagrant. I have one Master and two Nodes. This is my VM topology

My VM specification

[root@Master ~]# cat /etc/*release*

CentOS Linux release 7.2.1511 (Core)

Derived from Red Hat Enterprise Linux 7.2 (Source)

NAME="CentOS Linux"

VERSION="7 (Core)"

ID="centos"

ID_LIKE="rhel fedora"

VERSION_ID="7"

PRETTY_NAME="CentOS Linux 7 (Core)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:centos:centos:7"

HOME_URL="https://www.centos.org/"

BUG_REPORT_URL="https://bugs.centos.org/"

CENTOS_MANTISBT_PROJECT="CentOS-7"

CENTOS_MANTISBT_PROJECT_VERSION="7"

REDHAT_SUPPORT_PRODUCT="centos"

REDHAT_SUPPORT_PRODUCT_VERSION="7"

CentOS Linux release 7.2.1511 (Core)

CentOS Linux release 7.2.1511 (Core)

cpe:/o:centos:centos:7

[root@Master ~]# uname -r

3.10.0-327.el7.x86_64

Procedure:

Fire up the VMs

$vagrant up

Note: There is an issue when running Kubernetes in a Vagrant VM environment. By default the kubeadm script picks the Vagrant NAT interface (eth0, IP 10.0.2.15), but we need it to pick the second interface (eth1), on which the Master and Nodes communicate. To force kubeadm to pick the eth1 interface, edit your /etc/hosts file so that hostname -i returns the VM's eth1 IP address.

[root@Master ~]# cat /etc/hosts

192.168.11.11 Master

[root@Master ~]# hostname -i

192.168.11.11

[root@Master ~]#
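The check above is easy to script so it can be repeated on every VM before running kubeadm. This is a minimal sketch; the helper name `check_node_ip` is hypothetical, and it assumes `hostname -i` prints a single address, as it does in the output above.

```shell
#!/bin/sh
# Sketch: check that the address reported by "hostname -i" is the one
# the cluster should use. The helper name is hypothetical.
# Usage: check_node_ip EXPECTED_IP ACTUAL_IP
check_node_ip() {
    expected=$1
    actual=$2
    if [ "$actual" = "$expected" ]; then
        echo "ok: hostname resolves to $expected"
    else
        echo "mismatch: hostname resolves to '$actual', expected $expected"
        return 1
    fi
}

# On the Master in this lab one would call:
#   check_node_ip 192.168.11.11 "$(hostname -i)"
check_node_ip 192.168.11.11 192.168.11.11
check_node_ip 192.168.11.11 10.0.2.15 || echo "fix /etc/hosts before running kubeadm"
```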

Run these steps on all VMs (Master and Nodes). I am following the installation instructions from the official Kubernetes site, which use kubeadm to install Kubernetes.

//create the file kubernetes.repo in the directory /etc/yum.repos.d

[root@Master ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://yum.kubernetes.io/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

//put SELinux in permissive mode (setenforce 0 does not persist across reboots)

[root@Master ~]# sudo setenforce 0

[root@Master ~]# sudo yum install -y docker kubeadm kubelet kubectl kubernetes-cni

[root@Master ~]# sudo systemctl enable docker && systemctl start docker

[root@Master ~]# sudo systemctl enable kubelet && systemctl start kubelet
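Because `setenforce 0` only lasts until the next reboot, one option is to also change the mode in the SELinux config file. The sketch below is an assumption on my part rather than a step from the installation guide I followed; it uses GNU `sed -i` and is demonstrated on a scratch file instead of the real /etc/selinux/config. The helper name `set_selinux_permissive` is hypothetical.

```shell
#!/bin/sh
# Sketch: make permissive mode persistent by editing an SELinux config file.
# Works on whatever path is passed in, so it can be tried on a copy first.
# Usage: set_selinux_permissive CONFIG_FILE
set_selinux_permissive() {
    config=$1
    sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' "$config"
}

# Demonstration on a scratch copy rather than the real /etc/selinux/config.
scratch=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$scratch"
set_selinux_permissive "$scratch"
cat "$scratch"
```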

Initialize Master

Run the step below on the Master only. This command initializes the master. You can let kubeadm pick the IP address or specify it explicitly, which is what I am doing here. This is the IP address of my Master machine’s eth1 interface. Make sure the Nodes can reach the Master on this address.

At the end of its output, the command prints the join command for the Nodes.

//this command may take a couple of minutes

[root@Master ~]# sudo kubeadm init --api-advertise-addresses 192.168.11.11

[kubeadm] WARNING: kubeadm is in alpha, please do not use it for production clusters.

[preflight] Running pre-flight checks

[init] Using Kubernetes version: v1.5.3

[tokens] Generated token: "084173.692e29a481ef443d"

[certificates] Generated Certificate Authority key and certificate.

[certificates] Generated API Server key and certificate

[certificates] Generated Service Account signing keys

[certificates] Created keys and certificates in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[apiclient] Created API client, waiting for the control plane to become ready

[apiclient] All control plane components are healthy after 51.082637 seconds

[apiclient] Waiting for at least one node to register and become ready

[apiclient] First node is ready after 1.017582 seconds

[apiclient] Creating a test deployment

[apiclient] Test deployment succeeded

[token-discovery] Created the kube-discovery deployment, waiting for it to become ready

[token-discovery] kube-discovery is ready after 30.503718 seconds

[addons] Created essential addon: kube-proxy

[addons] Created essential addon: kube-dns

Your Kubernetes master has initialized successfully!

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

http://kubernetes.io/docs/admin/addons/

You can now join any number of machines by running the following on each node:

kubeadm join --token=084173.692e29a481ef443d 192.168.11.11

//make a note of the kubeadm join command from the output above; you need to run it on the Nodes to join the Master

kubeadm join --token=084173.692e29a481ef443d 192.168.11.11

Deploy POD network

Run this command only on the Master. Note: per the Kubernetes installation instructions, this step must be performed before the Nodes join.

[root@Master ~]# kubectl apply -f https://git.io/weave-kube

Once a pod network has been installed, you can confirm that it is working by checking that the kube-dns pod is Running in the output of kubectl get pods --all-namespaces.

And once the kube-dns pod is up and running, you can continue by joining your nodes
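The kube-dns check can be scripted rather than read by eye. The sketch below reads `kubectl get pods --all-namespaces` output on stdin and succeeds only if a kube-dns pod reports Running. It assumes the column layout shown in this lab (NAME in column 2, STATUS in column 4); the helper name `kube_dns_running` is hypothetical.

```shell
#!/bin/sh
# Sketch: read "kubectl get pods --all-namespaces" output on stdin and
# succeed only if a kube-dns pod reports STATUS "Running".
# Column positions (NAME=2, STATUS=4) match the output format shown in this lab.
kube_dns_running() {
    awk '$2 ~ /^kube-dns-/ && $4 == "Running" { found = 1 } END { exit (found ? 0 : 1) }'
}

# Intended use on the Master:
#   kubectl get pods --all-namespaces | kube_dns_running && echo "safe to join nodes"

# Self-check against a sample line in the same format:
printf 'NAMESPACE NAME READY STATUS RESTARTS AGE\nkube-system kube-dns-2924299975-15b4q 4/4 Running 0 1d\n' \
    | kube_dns_running && echo "kube-dns is Running"
```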

Join the Master

Run the command below on both Nodes to join the Master. This command starts the kubelet on the Nodes.

[root@Node1 ~]# kubeadm join --token=084173.692e29a481ef443d 192.168.11.11

[kubeadm] WARNING: kubeadm is in alpha, please do not use it for production clusters.

[preflight] Running pre-flight checks

[preflight] Starting the kubelet service

[tokens] Validating provided token

[discovery] Created cluster info discovery client, requesting info from "http://192.254.211.168:9898/cluster-info/v1/?token-id=084173"

[discovery] Cluster info object received, verifying signature using given token

[discovery] Cluster info signature and contents are valid, will use API endpoints [https://192.254.211.168:6443]

[bootstrap] Trying to connect to endpoint https://192.168.11.11:6443

[bootstrap] Detected server version: v1.5.3

[bootstrap] Successfully established connection with endpoint "https://192.168.11.11:6443"

[csr] Created API client to obtain unique certificate for this node, generating keys and certificate signing request

[csr] Received signed certificate from the API server:

Issuer: CN=kubernetes | Subject: CN=system:node:Minion_1 | CA: false

Not before: 2017-02-15 22:24:00 +0000 UTC Not After: 2018-02-15 22:24:00 +0000 UTC

[csr] Generating kubelet configuration

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

Node join complete:

* Certificate signing request sent to master and response

received.

* Kubelet informed of new secure connection details.

Run 'kubectl get nodes' on the master to see this machine join.
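To confirm that every machine has joined, the `kubectl get nodes` output can be counted instead of inspected manually. This is a small sketch that reads that output on stdin; it assumes the NAME/STATUS/AGE layout produced by this Kubernetes version, and the helper name `count_ready_nodes` is hypothetical.

```shell
#!/bin/sh
# Sketch: count nodes whose STATUS column starts with "Ready" in
# "kubectl get nodes" output read from stdin (the header line is skipped).
count_ready_nodes() {
    awk 'NR > 1 && $2 ~ /^Ready/ { n++ } END { print n + 0 }'
}

# Intended use on the Master:
#   kubectl get nodes | count_ready_nodes

# Sample input in the same format; a NotReady node is not counted.
printf 'NAME STATUS AGE\nmaster Ready,master 1h\nnode1 Ready 1h\nnode2 NotReady 1m\n' \
    | count_ready_nodes   # prints 2
```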

Let’s check which Kubernetes processes have started on the Master

//these are the Kubernetes-related processes running on the Master

[root@Master ~]# netstat -pan

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 21274/etcd

tcp 0 0 127.0.0.1:10251 0.0.0.0:* LISTEN 21091/kube-schedule

tcp 0 0 127.0.0.1:10252 0.0.0.0:* LISTEN 21540/kube-controll

tcp 0 0 127.0.0.1:2380 0.0.0.0:* LISTEN 21274/etcd

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 21406/kube-apiserve

tcp 0 0 0.0.0.0:6783 0.0.0.0:* LISTEN 4820/weaver

tcp 0 0 127.0.0.1:6784 0.0.0.0:* LISTEN 4820/weaver

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 20432/kubelet

//all kube-system pods are running which is a good sign

[root@Master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system dummy-2088944543-hp7nl 1/1 Running 0 1d

kube-system etcd-master 1/1 Running 0 1d

kube-system kube-apiserver-master 1/1 Running 0 1d

kube-system kube-controller-manager-master 1/1 Running 0 1d

kube-system kube-discovery-1769846148-qtjkn 1/1 Running 0 1d

kube-system kube-dns-2924299975-15b4q 4/4 Running 0 1d

kube-system kube-proxy-9rfxv 1/1 Running 0 1d

kube-system kube-proxy-qh191 1/1 Running 0 1d

kube-system kube-proxy-zhtlg 1/1 Running 0 1d

kube-system kube-scheduler-master 1/1 Running 0 1d

kube-system weave-net-bc9k9 2/2 Running 11 1d

kube-system weave-net-nx7t0 2/2 Running 2 1d

kube-system weave-net-ql04q 2/2 Running 11 1d

[root@Master ~]#

As you can see, the Master and Nodes are in the Ready state. The cluster is ready to deploy pods.

[root@Master ~]# kubectl get nodes

NAME STATUS AGE

master Ready,master 1h

node1 Ready 1h

node2 Ready 1h

[root@Master ~]# kubectl cluster-info

Kubernetes master is running at http://Master:8080

KubeDNS is running at http://Master:8080/api/v1/proxy/namespaces/kube-system/services/kube-dns

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

//check components status. everything looks healthy here

[root@Master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

Kubernetes version

[root@Master ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.1", GitCommit:"82450d03cb057bab0950214ef122b67c83fb11df", GitTreeState:"clean", BuildDate:"2016-12-14T00:57:05Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.3", GitCommit:"029c3a408176b55c30846f0faedf56aae5992e9b", GitTreeState:"clean", BuildDate:"2017-02-15T06:34:56Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

[root@Master ~]#

Kubernetes UI

As I said earlier, there are three ways to interact with your cluster. Let’s try the UI. I am following the procedure specified here

Run the command below to check whether the Kubernetes dashboard is already installed on the Master

[root@Master ~]# kubectl get pods --all-namespaces | grep dashboard

If it is not installed, as in my case, run the command below to install the Kubernetes dashboard

[root@Master ~]# kubectl create -f https://rawgit.com/kubernetes/dashboard/master/src/deploy/kubernetes-dashboard.yaml

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

Run the command below to set up a proxy. kubectl will handle authentication with the apiserver and make the Dashboard available at http://localhost:8001/ui.

[root@Master ~]# kubectl proxy &

Starting to serve on 127.0.0.1:8001

Open a browser and point it to http://localhost:8001/ui. You should get the Kubernetes dashboard UI. You can check your cluster status and deploy pods in the cluster using the UI.

Deploy pod

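Let’s deploy a pod using the kubectl CLI. I am using a YAML template. Create the template below on your Master. My template file name is single_container_pod.yaml

This template deploys a pod with one container, in this case an nginx server. I named my pod web-server and declared container port 8000. Note that the stock nginx image listens on port 80 by default; containerPort is informational and does not change the port nginx listens on.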

[root@Master ~]# cat single_container_pod.yaml

apiVersion: v1

kind: Pod

metadata:

  name: web-server

  labels:

    app: web-server

spec:

  containers:

  - name: nginx

    image: nginx

    ports:

    - containerPort: 8000

Create the pod using the above template

[root@Master ~]# kubectl create -f single_container_pod.yaml

pod "web-server" created

//1/1 means this pod is running with one container

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-server 1/1 Running 0 47s

[root@Master ~]# kubectl describe pod web-server

Name: web-server

Namespace: default

Node: node2/192.168.11.13

Start Time: Sun, 26 Feb 2017 06:29:28 +0000

Labels: app=web-server

Status: Running

IP: 10.36.0.1

Controllers: <none>

Containers:

nginx:

Container ID: docker://3b63cab5804d1842659c6424369e6b4a163b482f560ed6324460ea16fdce791e

Image: nginx

Image ID: docker-pullable://docker.io/nginx@sha256:4296639ebdf92f035abf95fee1330449e65990223c899838283c9844b1aaac4c

Port: 8000/TCP

State: Running

Started: Sun, 26 Feb 2017 06:29:30 +0000

Ready: True

Restart Count: 0

Volume Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-pdsm6 (ro)

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

Volumes:

default-token-pdsm6:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-pdsm6

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

21s 21s 1 {default-scheduler } Normal Scheduled Successfully assigned web-server to node2

20s 20s 1 {kubelet node2} spec.containers{nginx} Normal Pulling pulling image "nginx"

19s 19s 1 {kubelet node2} spec.containers{nginx} Normal Pulled Successfully pulled image "nginx"

19s 19s 1 {kubelet node2} spec.containers{nginx} Normal Created Created container with docker id 3b63cab5804d; Security:[seccomp=unconfined]

19s 19s 1 {kubelet node2} spec.containers{nginx} Normal Started Started container with docker id 3b63cab5804d

//this command tells you which Node the pod is running on. Looks like our pod was scheduled on Node2

[root@Master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

web-server 1/1 Running 0 2m 10.36.0.1 node2

[root@Master ~]#
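If you want the node name on its own, for example to log in to the right VM from a script, the `-o wide` output can be filtered. The sketch below reads that output on stdin and locates the NODE column by its header name, so it does not depend on a fixed column position. The helper name `pod_node` is hypothetical, and it assumes no column value contains spaces.

```shell
#!/bin/sh
# Sketch: print the node a pod is scheduled on, given
# "kubectl get pods -o wide" output on stdin.
# The NODE column is located by its header name rather than a fixed position.
# Usage: ... | pod_node POD_NAME
pod_node() {
    awk -v pod="$1" '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == "NODE") col = i; next }
        $1 == pod && col { print $col }
    '
}

# Intended use on the Master:
#   kubectl get pods -o wide | pod_node web-server

# Sample input copied from the output above:
printf 'NAME READY STATUS RESTARTS AGE IP NODE\nweb-server 1/1 Running 0 2m 10.36.0.1 node2\n' \
    | pod_node web-server   # prints node2
```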

You can log in to the container using the kubectl exec command

[root@Master ~]# kubectl exec web-server -it sh

# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

15: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UP group default

link/ether ee:9d:a1:cb:db:ee brd ff:ff:ff:ff:ff:ff

inet 10.44.0.2/12 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ec9d:a1ff:fecb:dbee/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

# env

KUBERNETES_SERVICE_PORT=443

KUBERNETES_PORT=tcp://10.96.0.1:443

HOSTNAME=web-server

HOME=/root

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

NGINX_VERSION=1.11.10-1~jessie

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_SERVICE_HOST=10.96.0.1

PWD=/

#

Let’s log in to Node2 and check the container using the Docker CLI

//as can be seen, the nginx container is running on Node2

[root@Node2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3b63cab5804d nginx "nginx -g 'daemon off" 4 minutes ago Up 4 minutes k8s_nginx.f4302f56_web-server_default_ec8bd607-fbec-11e6-ac27-525400225b53_2dc5c9e9

ce8cd44bd08e gcr.io/google_containers/pause-amd64:3.0 "/pause" 4 minutes ago Up 4 minutes k8s_POD.d8dbe16c_web-server_default_ec8bd607-fbec-11e6-ac27-525400225b53_85ec4303

5a20ca6bed11 weaveworks/weave-kube:1.9.0 "/home/weave/launch.s" 24 hours ago Up 24 hours k8s_weave.c980d315_weave-net-ql04q_kube-system_5f5b0916-fb1e-11e6-ac27-525400225b53_125b8d34

733d2927383f weaveworks/weave-npc:1.9.0 "/usr/bin/weave-npc" 24 hours ago Up 24 hours k8s_weave-npc.a8b5954e_weave-net-ql04q_kube-system_5f5b0916-fb1e-11e6-ac27-525400225b53_7ab4a6a7

d270cb27e576 gcr.io/google_containers/kube-proxy-amd64:v1.5.3 "kube-proxy --kubecon" 24 hours ago Up 24 hours k8s_kube-proxy.3cceb559_kube-proxy-zhtlg_kube-system_5f5b707f-fb1e-11e6-ac27-525400225b53_b38dc39e

042abc6ec49c gcr.io/google_containers/pause-amd64:3.0 "/pause" 24 hours ago Up 24 hours k8s_POD.d8dbe16c_weave-net-ql04q_kube-system_5f5b0916-fb1e-11e6-ac27-525400225b53_02af8f33

56d00c47759f gcr.io/google_containers/pause-amd64:3.0 "/pause" 24 hours ago Up 24 hours k8s_POD.d8dbe16c_kube-proxy-zhtlg_kube-system_5f5b707f-fb1e-11e6-ac27-525400225b53_56485a90

//docker nginx images loaded in Node2

[root@Node2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/nginx latest db079554b4d2 10 days ago 181.8 MB

gcr.io/google_containers/kube-proxy-amd64 v1.5.3 932ee3606ada 10 days ago 173.5 MB

docker.io/weaveworks/weave-npc 1.9.0 460b9ad16e86 3 weeks ago 58.22 MB

docker.io/weaveworks/weave-kube 1.9.0 568b0ac069ad 3 weeks ago 162.7 MB

gcr.io/google_containers/pause-amd64 3.0 99e59f495ffa 9 months ago 746.9 kB

Delete the pod. Run these commands on the Master

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-server 1/1 Running 0 59m

[root@Master ~]# kubectl delete pod web-server

pod "web-server" deleted

[root@Master ~]# kubectl get pods

No resources found.

Check Node2 and make sure container is deleted

//as you can see there is no nginx container running on Node2

[root@Node2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5a20ca6bed11 weaveworks/weave-kube:1.9.0 "/home/weave/launch.s" 24 hours ago Up 24 hours k8s_weave.c980d315_weave-net-ql04q_kube-system_5f5b0916-fb1e-11e6-ac27-525400225b53_125b8d34

733d2927383f weaveworks/weave-npc:1.9.0 "/usr/bin/weave-npc" 24 hours ago Up 24 hours k8s_weave-npc.a8b5954e_weave-net-ql04q_kube-system_5f5b0916-fb1e-11e6-ac27-525400225b53_7ab4a6a7

d270cb27e576 gcr.io/google_containers/kube-proxy-amd64:v1.5.3 "kube-proxy --kubecon" 24 hours ago Up 24 hours k8s_kube-proxy.3cceb559_kube-proxy-zhtlg_kube-system_5f5b707f-fb1e-11e6-ac27-525400225b53_b38dc39e

042abc6ec49c gcr.io/google_containers/pause-amd64:3.0 "/pause" 24 hours ago Up 24 hours k8s_POD.d8dbe16c_weave-net-ql04q_kube-system_5f5b0916-fb1e-11e6-ac27-525400225b53_02af8f33

56d00c47759f gcr.io/google_containers/pause-amd64:3.0 "/pause" 24 hours ago Up 24 hours k8s_POD.d8dbe16c_kube-proxy-zhtlg_kube-system_5f5b707f-fb1e-11e6-ac27-525400225b53_56485a90

//image remains for future use

[root@Node2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/nginx latest db079554b4d2 10 days ago 181.8 MB

gcr.io/google_containers/kube-proxy-amd64 v1.5.3 932ee3606ada 10 days ago 173.5 MB

docker.io/weaveworks/weave-npc 1.9.0 460b9ad16e86 3 weeks ago 58.22 MB

docker.io/weaveworks/weave-kube 1.9.0 568b0ac069ad 3 weeks ago 162.7 MB

gcr.io/google_containers/pause-amd64 3.0 99e59f495ffa 9 months ago 746.9 kB

[root@Node2 ~]#

Replication controller

Create a YAML template for the replication controller. You can read more about replication controllers here.

This template creates 10 pod replicas using ‘replicas: 10’.

[root@Master ~]# cat web-rc.yaml

apiVersion: v1

kind: ReplicationController

metadata:

  name: nginx

spec:

  replicas: 10

  selector:

    app: web-server

  template:

    metadata:

      name: web-server

      labels:

        app: web-server

    spec:

      containers:

      - name: nginx

        image: nginx

        ports:

        - containerPort: 8000

Create the replication controller

[root@Master ~]# kubectl create -f web-rc.yaml

replicationcontroller "nginx" created

//10 pods created

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-498mx 1/1 Running 0 10s

nginx-9vfsd 1/1 Running 0 10s

nginx-dgvg6 1/1 Running 0 10s

nginx-fh4bv 1/1 Running 0 10s

nginx-k7j9d 1/1 Running 0 10s

nginx-mz5r0 1/1 Running 0 10s

nginx-q2z79 1/1 Running 0 10s

nginx-w6b4d 1/1 Running 0 10s

nginx-wkshq 1/1 Running 0 10s

nginx-wz7ss 1/1 Running 0 10s

[root@Master ~]# kubectl describe replicationcontrollers/nginx

Name: nginx

Namespace: default

Image(s): nginx

Selector: app=web-server

Labels: app=web-server

Replicas: 10 current / 10 desired

Pods Status: 10 Running / 0 Waiting / 0 Succeeded / 0 Failed

No volumes.

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-fh4bv

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-k7j9d

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-mz5r0

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-dgvg6

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-498mx

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-w6b4d

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-9vfsd

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-q2z79

2m 2m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-wkshq

2m 2m 1 {replication-controller } Normal SuccessfulCreate (events with common reason combined)

[root@Master ~]#

Delete one pod. Since we want 10 replicas, the replication controller starts a replacement pod so that the total is always 10.

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-498mx 1/1 Running 0 6m

nginx-9vfsd 1/1 Running 0 6m

nginx-dgvg6 1/1 Running 0 6m

nginx-fh4bv 1/1 Running 0 6m

nginx-k7j9d 1/1 Running 0 6m

nginx-mz5r0 1/1 Running 0 6m

nginx-q2z79 1/1 Running 0 6m

nginx-w6b4d 1/1 Running 0 6m

nginx-wkshq 1/1 Running 0 6m

nginx-wz7ss 1/1 Running 0 6m

[root@Master ~]# kubectl delete pod nginx-k7j9d

pod "nginx-k7j9d" deleted

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-498mx 1/1 Running 0 6m

nginx-74qp9 0/1 ContainerCreating 0 3s

nginx-9vfsd 1/1 Running 0 6m

nginx-dgvg6 1/1 Running 0 6m

nginx-fh4bv 1/1 Running 0 6m

nginx-mz5r0 1/1 Running 0 6m

nginx-q2z79 1/1 Running 0 6m

nginx-w6b4d 1/1 Running 0 6m

nginx-wkshq 1/1 Running 0 6m

nginx-wz7ss 1/1 Running 0 6m

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-498mx 1/1 Running 0 6m

nginx-74qp9 1/1 Running 0 6s

nginx-9vfsd 1/1 Running 0 6m

nginx-dgvg6 1/1 Running 0 6m

nginx-fh4bv 1/1 Running 0 6m

nginx-mz5r0 1/1 Running 0 6m

nginx-q2z79 1/1 Running 0 6m

nginx-w6b4d 1/1 Running 0 6m

nginx-wkshq 1/1 Running 0 6m

nginx-wz7ss 1/1 Running 0 6m

[root@Master ~]#

Increase and decrease number of replicas

[root@Master ~]# kubectl scale --replicas=15 replicationcontroller/nginx

replicationcontroller "nginx" scaled

//increase number of replicas to 15

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-1jdn9 1/1 Running 0 7s

nginx-498mx 1/1 Running 0 17m

nginx-74qp9 1/1 Running 0 11m

nginx-9vfsd 1/1 Running 0 17m

nginx-bgdc6 1/1 Running 0 7s

nginx-dgvg6 1/1 Running 0 17m

nginx-fh4bv 1/1 Running 0 17m

nginx-j2xtf 1/1 Running 0 7s

nginx-m8vlq 1/1 Running 0 7s

nginx-mz5r0 1/1 Running 0 17m

nginx-q2z79 1/1 Running 0 17m

nginx-rmrqt 1/1 Running 0 7s

nginx-w6b4d 1/1 Running 0 17m

nginx-wkshq 1/1 Running 0 17m

nginx-wz7ss 1/1 Running 0 17m

[root@Master ~]#

[root@Master ~]# kubectl scale --replicas=5 replicationcontroller/nginx

replicationcontroller "nginx" scaled

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-9vfsd 1/1 Running 0 19m

nginx-dgvg6 1/1 Running 0 19m

nginx-fh4bv 1/1 Running 0 19m

nginx-mz5r0 0/1 Terminating 0 19m

nginx-q2z79 1/1 Running 0 19m

nginx-w6b4d 1/1 Running 0 19m

[root@Master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-9vfsd 1/1 Running 0 19m

nginx-dgvg6 1/1 Running 0 19m

nginx-fh4bv 1/1 Running 0 19m

nginx-q2z79 1/1 Running 0 19m

nginx-w6b4d 1/1 Running 0 19m

[root@Master ~]#

Note: I found that after the Vagrant VMs shut down and restart, things don’t work properly; the API server doesn’t come up. The Kubernetes documentation explains these steps to restart your cluster if you run into problems. I tried them but was only able to bring up the cluster with one Node.

Reset the cluster. Perform the step below on all VMs

#kubeadm reset

Redo steps

#systemctl enable kubelet && systemctl start kubelet

#kubeadm init --api-advertise-addresses 192.168.11.11

#kubectl apply -f https://git.io/weave-kube

#kubeadm join
